Document Visualization with JS Divergence Matrix and Multi Dimensional Scaling

Bag of Words (BoW)

In text search and classification of text, word order contributes less to the search result or document classification unless it is part of a phrase. Therefore, it is a common practice to use the frequency of occurrence of words sacrificing the word order which is known as “bag of words”. In this document representation method, document is converted to vectors by simply counting number of occurrence of words. For example, the following two sentences would have the same vector representation: “I am who I become” and “I become who I am” as the frequency of occurrence of words are same even though the word order is different. The rationality behind of this document representation is that, the presence and count of words do matter more than the word sequence in a sentence for classification. In practice, this representation is “lingua franca” along with tf-idf representation.

Corpus

In BoW setup, corpus corresponds to the distinct number of words that covers all of the documents that we have in our dataset.

Stop Words

Words that do not contribute to the distinctiveness of either class. They could occur quite a lot like (e.g. I, am, you, they) or occur infrequently. The ones that occur a lot may prevent the vectors to weight to the words that are distinct for particular class. The ones that occur infrequently prevents us to represent the documents efficiently and compactly as they make quite long corpus vectors. Therefore, for text classification, we want to remove both the words that occur infrequently and the words that occur a lot but do not contribute to the distinctiveness of either class. A very useful heuristics for these words are the most common (5% of the words that occur most) and the least common(5-10% of the words that occur least in the entire corpus). After removing these words, we would have a better corpus and hopefully better vector representation for the documents for each class. Note that, for a given dataset, due to nature of natural language, the distribution of a typical corpus follows long tail distribution. Therefore, even if the least common words would contribute to the distinctiveness of the classes, there may be trade-off where we gain some “information” incorporating the least common words and lose the compactness of vector representation.(This brings curse of dimensionality problem which we would try to resolve by bringing a dimensionality reduction method to the table.)

After explanation of terms, let us give some concrete examples to explain how this actually works: consider following three sentences:

- I am who I become

- I become who I am

- Who am I?

Ignoring the punctuation and uppercase, we have the following corpus for these three sentences: [‘am’, ‘become’, ‘i’, ‘who’]

(note that the words are sorted by alphabetical order) Quite a large corpus! Then the bag of words representation for the sentences are the following:

- [1, 1, 2, 1]

- [1, 1, 2, 1]

- [1, 0, 1, 1]

As previously mentioned, the first and second sentences would result in the same bag of words representation even if the sentences are different. One thing to note is that since the corpus is unrealistically small, we did not stop any words but normally the contribution of auxiliarry verbs and subject pronouns is minimal, so it is common practice to remove them alltogether. The default keyword list generally includes these type of words independent from the domain for the documents.

It is easy to see that all of the documents would have the length of the corpus where the word “distribution” of the sentences would differ if the sentences are different(ignoring word order). This is all good but as we have previously mentioned, this brings dimensionality problems and lose the vector representation’s efficiency and accuracy. This is due to the nature of bag of words representation, as all of the word frequency counts toward the vector, some of the not very informative words may overcome to the words that provides distinct character to the documents in a classification perspective. In order to prevent this, we may want to use a dimensionality reduction method. If the method preserves the relative distance of individual vectors in euclidean space, then we not only gain in terms of efficiency of the representation but also prevent curse of dimensionality and a better document representation! But taking a step back, how do measure the “distance” between two documents? Let’s revisit an information theory measure for comparison of two probability distribution, namely K-L Divergence.

Kullback-Leibler Divergence

K-L divergence is a measure of the difference of two probability distributions, which is a special case of Bregman Divergence. For formal definition, if we have and discrete probability distributions, then the k-l divergence of these two distributions is defined as such: $$ D_{KL}(p|q) = \displaystyle\sum_i ln(\frac{p(i)}{q(i)}) \hspace{2 mm} p(i) $$ Needless to say, this measure is not symmetric and is defined only and sum to 1 for all possible values. Moreover, for a particular , being implies to be as well, otherwise the whole term would be undefined. Adopting this measure into our bag of words representation to measure sentence difference brings us two problems. First, we only have the counts of the words that occur in sentence, not the distribution. Second, as sentences are only a small part of corpus, there will be lots of ’s in the vector representation(would be a sparse vector). In order to mitigate the first problem, we could divide the counts of words in the vector by the total sum of the word count. By doing so, we also make sure that we have two vectors whose values sum up to 1. But second one is the hard one, how do we fill the 0’s? Luckily, this problem also comes up with in different domains and we have a solution: smoothing. There are a number of smoothing algorithms, but the one that I used is Laplace Smoothing(also called Additive Smoothing) which I will explain in the next section.

As mentioned before, this measure is not symmetric, but note that it is not related to bag of words representation per se, so we will provide another remedy for that after introducing the smoothing.

Laplace Smoothing

Behind of smoothing the distributions is to remove the ’s from the distribution by introducing small amount of factor so that they will not be but also they will not have a large share in overall distribution. By doing so, we would mitigate the implausibility of ’s with very small cost. Formally Laplace Smoothing is applied to distribution as such: $$ \hat{p_i} = \frac{p_i + \alpha}{1 + \alpha N} \hspace{5 mm} (i=1, \ldots, N) $$ where is the smoothing parameter and is the number of discrete terms in the distribution.

> Note that without , is as expected and > adding for every parameter would not change the sum of the > distribution as in the denominator, we divide by .

Very well, we removed our ’s and have “smooth” distributions. However, what kind of measure is this K-L divergence if it is not symmetric? Think about it one second, difference between sentence A and sentence B would be different than difference between sentence B and sentence A. How ridiculuous is that! Luckily, we have a solution based on K-L divergence; Jensen Shannon Divergence which will be explained in the next section:

Jensen-Shannon Divergence

J-S Divergence builds itself on top of K-L divergence promising it is symmetric; which is nice and quite popular measure of two probability distributions. Formally it is defined for and distributions as such: where .

Instead of going through getting K-L divergence of individual distribution, J-S does this using a mixture distribution of . Quite smart, huh? By doing so, it ensures that it is symmetric and nice measure for probability distribution differences.

J-S Matrix

Say we have documents and we constructed our J-S matrix using J-S Divergence for each pair of smoothed bag of words of documents. This would result in matrix where the is the size of our corpus as every document is represented vectors. This matrix representation would not solve our curse of dimensionality problem. So, let us introduce a dimensionality reduction method(among many others); Multidimensional Scaling.

Multidimensional Scaling (MDS)

Over-simplified idea behind MDS is that if we could find an embedding which has a significantly lower dimension for high dimension space and preserve the distance between observation pairs, we do not lose much in relative sense since we are keeping the distance between the pairs. Further, we both reduce dimensionality quite a lot and preserve the relative distance to each other. This is somehow different than traditional dimensionality reduction methods where they they generally scale individual observations or all of the observations altogether. MDS seeks to fit a lower embedding for observation pairs. Since the J-S divergence deals with document pairs, J-S Divergence matrix could be considered as a dissimilarity matrix for the documents in that sense. Therefore, it is a perfect fit for MDS as MDS also tries to reduce the dimensionality of J-S measure between two documents. We want to find 2-dimension lower embedding in order to visualize the documents in a scatter plot but 1-dimension works as well as any number of dimension could be used for MDS. Formally, loss function of MDS is defined as following: where is the dissimilarity matrix where is the number of observations and is the dimension of the original space. We are not going to show how the minimization works, but for a lower embedding(say 2), the minimization function could be optimized through gradient descent.

Result

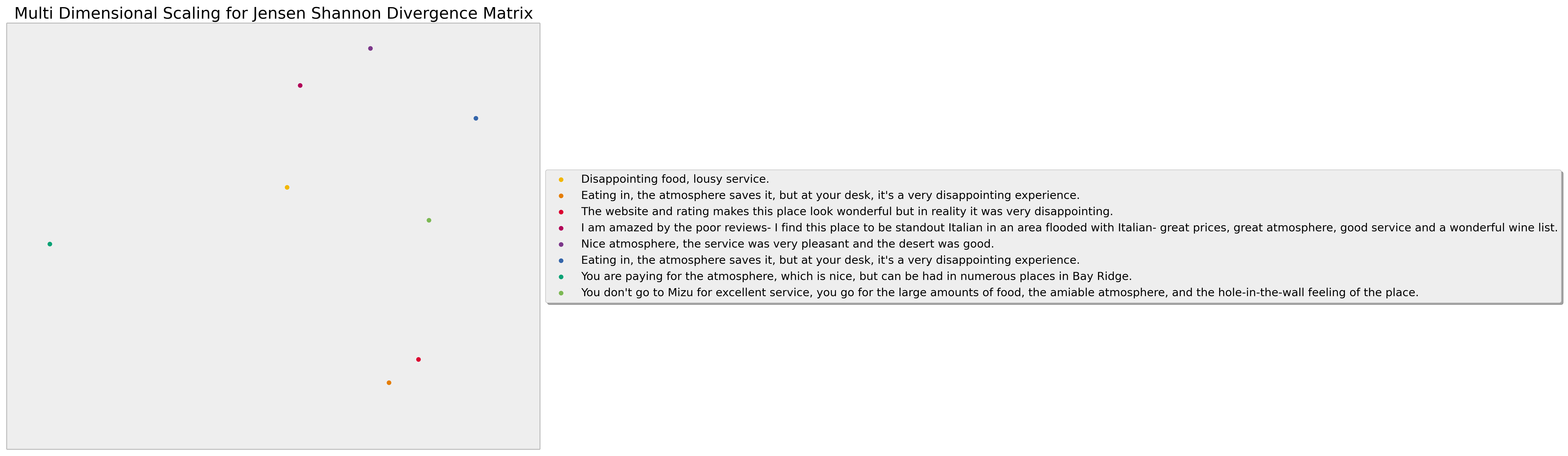

After we apply MDS to our J-S divergence matrix, we get - pair of coordinates of each document and it looks like the following(for a selected documents):

Data is from Yelp’s reviews of restaurantts and first three sentences are negative and mention about how disappointing their experience was with restaurant. The rest of them are generally positive and even if their experience may include some negativity, their overall experience is quite positive.

As you could see from the scatter plot, the grouping of these two different class are somehow separate even though the documents are quite short and overall corpus is only 8 sentences.

Possible Improvement

We talked about stop words but curating stop words is manual and laborious work. Further, it generally evolves to be domain specific as you incorporate more and more stop words. Instead of using stopwords, one may choose feature selection methods to choose the words from either classification accuracy or some information theory measure from the training dataset. This not only eliminates the stop words curation step alltogether but also increase the efficiency and compactness of the bag of words representation. As a result of this, when some dimensionality reduction method is applied to the vectors, they would position themselves better.

What is next?

An interactive app that allows user to type text and then visualize every sentence in a scatter plot. I have a working version of this, but it needs some polishing.