DynamoDB Learnings

At Hinge, we have been using DynamoDB in production for more than eight months, and we relaunched two weeks ago at full capacity. I want to share a couple of learnings and explain why it made sense for us to store ratings in DynamoDB, since I own rating processing in the application. We are processing millions of ratings per day, and so far DynamoDB is holding up well. Ratings are also crucial for our recommender to get smarter, so they need to be handled with care.

DynamoDB

It is a NoSQL database that is similar to other NoSQL databases like Mongo and to other key-value stores like SimpleDB, but it also differs in various ways. These differences bring a number of advantages and disadvantages.

Advantages

- It is managed and hosted by AWS. You do not need to set up any infrastructure. AWS takes care of everything in terms of managing this database for you.

- It is very easy to set up the database; you only need a table name and throughput numbers to get started (the `partition`-`range` key design is a different story). You will want to create your `cloudformation` template for reproducibility and for the key schema design, so that you do not have to go through the AWS web interface.

- It supports triggers through AWS Lambda; if you want to take an action or fire an event from another application, you can do that through this trigger support.

- It integrates very well with Redshift (and with other AWS offerings in general); you can load data into Redshift with a SQL command like the following:

      TRUNCATE REDSHIFT_TABLE_NAME;

      COPY REDSHIFT_TABLE_NAME FROM 'dynamodb://DYNAMO_DB_TABLE_NAME'
      CREDENTIALS 'aws_access_key_id=$AWS_ACCESS_KEY_ID;aws_secret_access_key=$AWS_SECRET_KEY_ID'
      TIMEFORMAT 'epochsecs'
      READRATIO 10
      ;

  If you pass TIMEFORMAT, Redshift recognizes the datetime field and automatically converts it to a timestamp. It works for epochmillisecs as well. READRATIO is the percentage of the table's provisioned read throughput that this command is allowed to use while it exports the data.

- There is seemingly no upper bound on how much scale you could get. The scaling is done by provisioning various

- You pay mostly for your usage or throughput rather than for storage (storage is billed as well, but at a comparatively low rate). I think this is a huge gain, especially if your dataset grows very large quickly. If most of the data becomes stale over time (never accessed), even better.

- Availability/Durability: all data is replicated to different availability zones by AWS, works like a charm.

- Scalable/Fast: they advertise “single-digit” millisecond latency for the database, and it definitely is fast. If you grow the database (say to 1 billion items), it is still single-digit milliseconds. This is nice. We are a Python shop and use `boto` to access the database; it averages 8 milliseconds for reads and 9 milliseconds for writes. We are happy with the performance so far.

- What they offer is actually not a database; it is an API that performs database operations. Even with the RDS offerings of AWS you need to do some configuration, whereas DynamoDB does not need anything from you in terms of operations.

- It supports consistent reads. You can also do eventually consistent reads, which cost half as much. It is flexible around consistency.
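To make the consistency trade-off concrete, here is a rough sketch of the capacity arithmetic. It assumes the commonly documented unit sizes (one read unit per strongly consistent read per second of an item up to 4 KB, one write unit per write per second of an item up to 1 KB); the function names, item sizes and request rates are made up for illustration.

```python
import math


def read_capacity_units(item_size_bytes: int, reads_per_second: int,
                        strongly_consistent: bool = True) -> int:
    """Estimate the read capacity units needed for a steady read rate."""
    # Assumption: one unit covers one strongly consistent read per second
    # of an item up to 4 KB; larger items round up to the next 4 KB.
    units_per_read = math.ceil(item_size_bytes / 4096)
    total = units_per_read * reads_per_second
    if not strongly_consistent:
        # Eventually consistent reads consume half as much capacity.
        total = math.ceil(total / 2)
    return total


def write_capacity_units(item_size_bytes: int, writes_per_second: int) -> int:
    """Estimate the write capacity units needed for a steady write rate."""
    # Assumption: one unit covers one write per second of an item up to 1 KB.
    return math.ceil(item_size_bytes / 1024) * writes_per_second


# Hypothetical workload: 200-byte items, 1000 requests per second.
strong = read_capacity_units(200, 1000, strongly_consistent=True)
eventual = read_capacity_units(200, 1000, strongly_consistent=False)
writes = write_capacity_units(200, 1000)
print(strong, eventual, writes)
```

For small items like these, switching a read path to eventual consistency halves the read capacity you need to provision, which is where the cost difference comes from.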

Everything is great, then? Not really, there are some things not so great about DynamoDB as well.

Disadvantages

- Lock-in. DynamoDB is not open source, and from the moment you start using the database you are locked into AWS.

- Even though it is very easy to set up the database, you need a good data model around the `hash` and `range` key schema in order to use the database in a cost-effective manner; especially considering you are paying for throughput, you do not want to do scan operations in the database.

- DynamoDB is not feature-rich in terms of how you index your data. You need to be cognizant of how you are going to query and write the data. Unlike Mongo, it does not support multi-indexing; you cannot index the columns arbitrarily, and you cannot add arbitrary indexes after you have created the table. You need a proper data model and a proper plan for how you are going to read and write the data with your `hash` and `range` keys. Spend time on designing the data model before you even consider using DynamoDB.

- A single record cannot exceed 400 KB. If your records are going to grow over time, be cognizant of this limitation.

- It limits the total size of the records returned by a single query to 1 MB.

- Documentation is not very straightforward, and I do not like the API `boto3` offers. You need the `batch_write_item` operation even if you want to delete a batch of items. Also, the keyword arguments and parameters you pass are not very Pythonic.

- Native data types are limited; there is no `datetime` support. You may want to convert the datetime into epoch seconds or milliseconds and store it as a `Number` (which is `decimal.Decimal` in Python).

- There is no automated provisioning for throughput. Everything else is handled by AWS, but not this. There are third-party libraries (like `dynamic-dynamodb`) that do this for you, but we have not used them. This could be considered a feature rather than a bug: since no automated way is supported, you end up overprovisioning the tables and paying more than you need, which is good for AWS but not so great for the user.

- You can downscale provisioned capacity only 6 times per 24 hours. Similarly, this could be a feature, not a bug.

- Once you hit the provisioned rate limit, all subsequent requests are going to fail, which is not great. You need to be careful about what you provision in terms of read and write throughput.

- Tooling around the database is not great; you do not know how many machines are running or whether your `hash` keys are uniformly distributed (I will explain in a bit what this means). Monitoring can be done only through CloudWatch, which is not great.

- Writes are five times more expensive than reads. Something to consider if your database operations are write-heavy.

- You cannot remove all of the records in the database. There is no operation similar to `TRUNCATE` in the SQL world. When we were doing load testing, we were wiping out the database; you can wipe out all of the records if you know what all of the partition keys are. If you do not have the partition keys, you can delete the table and recreate it from scratch.
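As an illustration of deleting through `batch_write_item`, here is a minimal sketch that only builds the request payloads. It assumes you already know the keys to delete; the table name `ratings`, the helper name and the sample keys are hypothetical. The batches are capped at 25 requests, which is the per-call limit of `batch_write_item`.

```python
from typing import Dict, Iterable, Iterator, List

MAX_BATCH_SIZE = 25  # batch_write_item accepts at most 25 requests per call


def delete_batches(table_name: str,
                   keys: Iterable[Dict[str, dict]]) -> Iterator[Dict[str, List[dict]]]:
    """Yield RequestItems payloads that delete the given keys in chunks of 25."""
    batch: List[dict] = []
    for key in keys:
        batch.append({"DeleteRequest": {"Key": key}})
        if len(batch) == MAX_BATCH_SIZE:
            yield {table_name: batch}
            batch = []
    if batch:
        # Flush the final, possibly smaller, batch.
        yield {table_name: batch}


# Hypothetical keys in the low-level attribute-value format.
keys = [
    {"player_id": {"S": "player-1"}, "subject_id": {"S": f"subject-{i}"}}
    for i in range(60)
]
payloads = list(delete_batches("ratings", keys))
print([len(p["ratings"]) for p in payloads])

# With a real client, each payload would be sent roughly like this:
#   client = boto3.client("dynamodb")
#   response = client.batch_write_item(RequestItems=payload)
#   # response["UnprocessedItems"] lists anything that still needs a retry
```

Every delete still consumes write capacity, so for a full wipe dropping and recreating the table is usually the cheaper route.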

Our Usage

We have ratings, which are actually one person's actions on another person. Currently, we have five allowed ratings: a person can block, report, skip, like, or note another person in the app. These are not ratings per se, but the term is part of our company vocabulary, so I am going to stick with it for the rest of the post. We want to store these actions in order to recommend, or not recommend, people to each other. We also want to store timestamps for when the action was first created (created_at) and last updated (updated_at), so that we can work out who the “first actor” and the “second actor” are. Another thing we want to store is, of course, the action itself, which we call state; it is an enum that maps the various actions to integers. Also, since we allow liking content in the app, we want to store that piece of content as well as its content type (content_type).

Design and Plan

We used to have MongoDB for our legacy rating table, and that had a few issues: once we had 1 TB of data, query times got longer and longer and it was not performing well (to put it lightly). We looked at different solutions at that point, and there were two main alternatives: Cassandra and DynamoDB. We eventually chose DynamoDB because it is hosted and managed by AWS (we did not want to go through the same thing we had with MongoDB), although Cassandra was as performant as DynamoDB for our use case. We tested both databases with a large number of records (1 billion items) and were happy with the performance of both.

We call the person who is acting the player and the person being acted upon the subject. In the previous version of Hinge (Hinge v3), we did reads per pair of people to see what their states were in order to process a new rating. We also queried the database per player to generate the list of ineligible people for that person, meaning the people whom the player has rated (skips being the exception). That means we need two different read queries: one by player_id and one by player_id-subject_id. This is great news! We do not need a whole bunch of indexes, and two indexes that are hierarchical (we never query by subject_id alone, always under a player_id) are all we need.

DynamoDB allows you to define a hash (or partition) key and a range key, and we can map player_id to the hash key and subject_id to the range key pretty easily. Since subjects always sit under the umbrella of a player_id, this fits naturally into the key schema that DynamoDB provides, which is exactly what we did, following the guideline.

| Partition key value | Uniformity |
| --- | --- |
| User ID, where the application has many users. | Good |

As far as the guidelines are concerned, we are using a user id for the partition key and another user id for the range key, so we should be good.
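The key schema described above could be expressed roughly as follows. This is a sketch, not our actual provisioning code: the table name `ratings`, the helper name and the throughput numbers are placeholders, and the dictionary simply mirrors the parameters that the `create_table` call of `boto3` expects.

```python
def ratings_table_definition(table_name: str, read_units: int, write_units: int) -> dict:
    """Build create_table parameters with player_id as hash key and subject_id as range key."""
    return {
        "TableName": table_name,
        # Only key attributes are declared up front; other attributes are schemaless.
        "AttributeDefinitions": [
            {"AttributeName": "player_id", "AttributeType": "S"},
            {"AttributeName": "subject_id", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "player_id", "KeyType": "HASH"},    # partition key
            {"AttributeName": "subject_id", "KeyType": "RANGE"},  # range (sort) key
        ],
        "ProvisionedThroughput": {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        },
    }


# Placeholder throughput values, not the numbers used in production.
definition = ratings_table_definition("ratings", read_units=100, write_units=100)
print(definition["KeySchema"])

# With credentials configured, the table could then be created with:
#   boto3.client("dynamodb").create_table(**definition)
```

With this schema, a query on player_id alone returns every subject under that player, and a lookup on the full player_id-subject_id pair returns a single rating.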

We could have a single partition key which combines player_id and subject_id, but that would require double writes for our use case since the states are not bidirectional. And since, as you may remember, writes are five times more expensive than reads, we opted for one more read query to do the rating resolution rather than a double insert. Rating resolution means figuring out whether the two people should become a connection or not.

One problem is that DynamoDB splits partitions (under the hood) based on the partition key, and power users who like to rate a lot of people will have their partitions split once the total data stored in a partition gets very large (larger than 10 GB), which will reduce our throughput. In these cases, the optimal solution would be to somehow identify power users and inactive users and assign them to the same partition, so that the total data size is distributed uniformly and no single partition hits that 10 GB limit. However, we have not done this as part of the design process; it is something we keep an eye on.

AWS does not provide a way to introspect the partitions. I wish there were a way to introspect them (the total number of items and the total size of each partition) to understand a little better what is going on under the hood.

Data Model

| player_id | subject_id | state | created_at | content | content_type |
| --- | --- | --- | --- | --- | --- |

Where `player_id`, `subject_id`, `content` and `content_type` are strings and `state` and `created_at` are Numbers. We thought about having a local secondary index on `state` as well to produce the ineligible list (which I explain in the next section), but we were not happy with the performance.
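A record in this shape could be assembled along the following lines. This is only a sketch: the helper names, the sample ids and the integer used for `state` are invented, and the timestamp is stored as whole epoch seconds in a `decimal.Decimal`, since Numbers map to `Decimal` in Python.

```python
from datetime import datetime, timezone
from decimal import Decimal
from typing import Optional


def to_epoch_seconds(moment: datetime) -> Decimal:
    """Convert a timezone-aware datetime to whole epoch seconds as a Decimal."""
    return Decimal(int(moment.timestamp()))


def build_rating_item(player_id: str, subject_id: str, state: int,
                      created_at: datetime,
                      content: Optional[str] = None,
                      content_type: Optional[str] = None) -> dict:
    """Assemble a rating record with the columns from the table above."""
    item = {
        "player_id": player_id,
        "subject_id": subject_id,
        "state": Decimal(state),
        "created_at": to_epoch_seconds(created_at),
    }
    # Optional attributes are simply left out when there is no content.
    if content is not None:
        item["content"] = content
    if content_type is not None:
        item["content_type"] = content_type
    return item


# Hypothetical values; the real state enum is not shown in this post.
item = build_rating_item(
    "player-1", "subject-9", state=4,
    created_at=datetime(2016, 1, 1, tzinfo=timezone.utc),
    content="photo-123", content_type="photo",
)
print(item["created_at"])
```

A dictionary like this is what you would hand to the resource-level `put_item` of `boto3`, which serializes `Decimal` values as Numbers.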

Ineligible People

We produce an ineligible people list every time we generate recommendations for our users. This is done by querying the subject_ids of a player_id from DynamoDB and putting them into a Bloom filter before passing it to the recommender service (we have an SOA). This query is done against player_id and is very fast. Its payload is going to grow as time goes by, but it will be nowhere near 1 MB in the near future (one would need to rate 62,500 people, since UUIDs are 16 bytes). Even then, we can do the query in two steps, and it should not have bad performance characteristics.
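For readers unfamiliar with the idea, here is a toy Bloom filter together with the payload arithmetic from the paragraph above. It is a sketch only: the class, its sizing and the hashing scheme are illustrative choices rather than the implementation we run, and the subject ids are made up.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Tiny Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size_bits: int = 8192, num_hashes: int = 4) -> None:
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8 + 1)

    def _positions(self, value: str) -> Iterator[int]:
        # Derive several bit positions by salting one hash function with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{value}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, value: str) -> None:
        for pos in self._positions(value):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, value: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(value))


# Payload arithmetic using the figures quoted in the post.
MAX_QUERY_BYTES = 1_000_000
UUID_BYTES = 16
max_subjects_per_query = MAX_QUERY_BYTES // UUID_BYTES

# Hypothetical subject ids returned by the per-player query.
rated_subjects = ["subject-a", "subject-b", "subject-c"]
ineligible = BloomFilter()
for subject_id in rated_subjects:
    ineligible.add(subject_id)

print(max_subjects_per_query, "subject-a" in ineligible)
```

The recommender can then test candidates against the filter without receiving the full list, at the price of occasionally excluding someone who was never rated.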

Reads per pair

Reads against player_id-subject_id are very fast, as are the writes. This was very important because we process a very large volume of rating activity per day, and even shaving off 100 milliseconds benefits the whole system. Every time we process a rating we do rating resolution, which works like an FSM, to figure out what the next state between the two people should be. We do an extra read in this operation since we do not double-insert when we write the pair into the database.
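The single-write, extra-read pattern can be sketched as below. Everything specific here is an assumption for illustration: the action names come from this post, but the integer values, the in-memory dictionary standing in for the table, and the rule that two positive actions produce a connection are invented and much simpler than the real state machine.

```python
from enum import IntEnum
from typing import Dict, Tuple


class Action(IntEnum):
    # Names come from the post; the integer values are made up.
    BLOCK = 1
    REPORT = 2
    SKIP = 3
    LIKE = 4
    NOTE = 5


# In-memory stand-in for the table, keyed by (player_id, subject_id).
Ratings = Dict[Tuple[str, str], Action]

# Assumption: both of these count as showing interest.
POSITIVE = {Action.LIKE, Action.NOTE}


def process_rating(ratings: Ratings, player_id: str, subject_id: str,
                   action: Action) -> bool:
    """Store the new rating and report whether the pair should be connected."""
    # Single write: only the player's own record is stored.
    ratings[(player_id, subject_id)] = action
    # Extra read: look up what the subject previously did to the player.
    reverse = ratings.get((subject_id, player_id))
    return action in POSITIVE and reverse in POSITIVE


ratings: Ratings = {}
first = process_rating(ratings, "alice", "bob", Action.LIKE)
second = process_rating(ratings, "bob", "alice", Action.LIKE)
print(first, second)
```

The first like finds no reverse record and resolves to no connection; the second finds it through the extra read, so no double insert is needed.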

Load Testing

We of course did load testing, since we knew this database was going to get large very quickly and we did not want to repeat the problem we had in the earlier version of Hinge. We sampled a bunch of ratings, filled a DynamoDB table with 1.2 billion records, and looked at how those queries performed at that size.

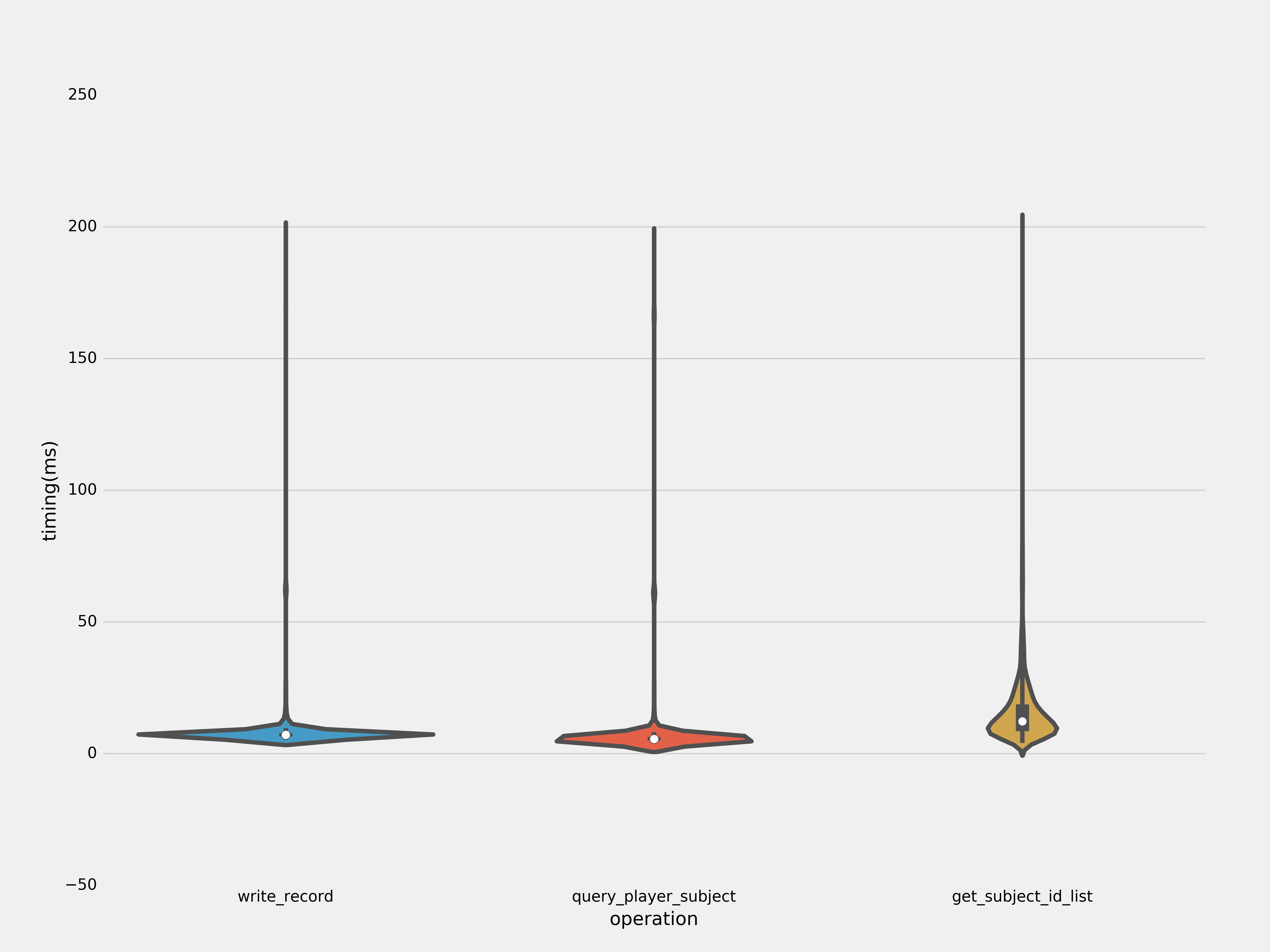

Timings in the above graph are in milliseconds. write_record is the timing of a single write, query_player_subject is the read operation for player_id-subject_id, and get_subject_id_list fetches the list of subjects that a player has rated.

The timings were pretty great, as you can see from the above graph. On average, reading player_id-subject_id, which is the busiest lookup operation, takes around 9 milliseconds, and writes were in a similar range (8-9 milliseconds). Constructing the Bloom filter takes more than 15 milliseconds on average for that volume, but nowhere near 20 milliseconds.

One caveat: the timings have a pretty long tail, and I cut the graph off at around 200 milliseconds.

Possible Improvements

If we were to store both parties' actions in a single rating record, that would be better in terms of the number of queries made against the database, but I am not sure how much efficiency we would lose in terms of payload size. Right now we have a connection object, which is more or less two ratings merged into a single object. Also, the (already bloated) state enum would become more complex, since the states would need to encode 3 or 4 actions (it encodes at most 2 actions right now). We could combine everything that happened into a single record, which would be more efficient and better in terms of query performance. However, I am not sure that solution would be cleaner and better than what we have right now.

Performance in the Wild

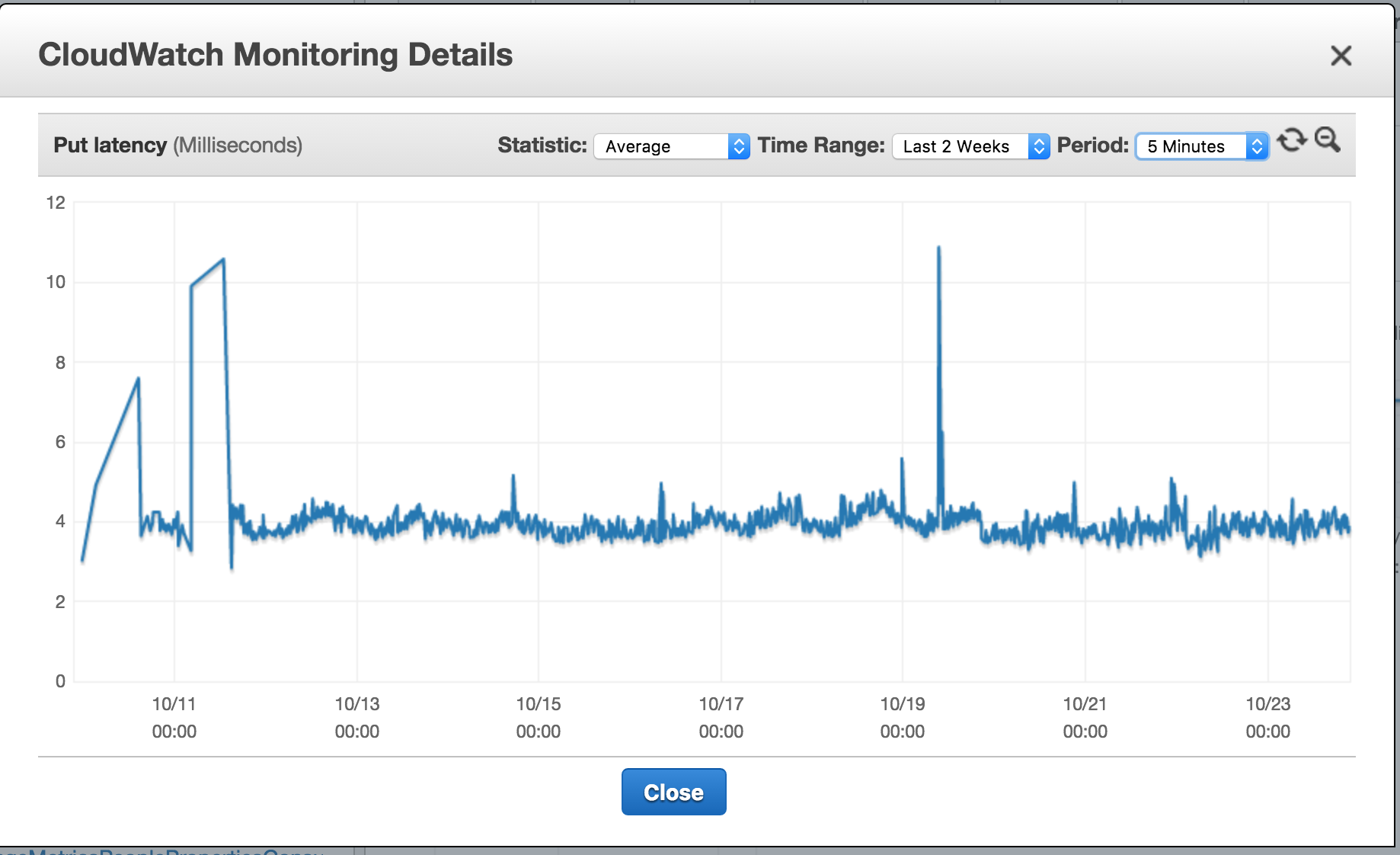

DynamoDB has been in production for two weeks since the global relaunch. I have graphs, of course (albeit from CloudWatch).

Especially on launch day, we knew that it was going to be crazy because of the PR and push, so we overprovisioned pretty much everything in terms of infrastructure. DynamoDB was no exception.

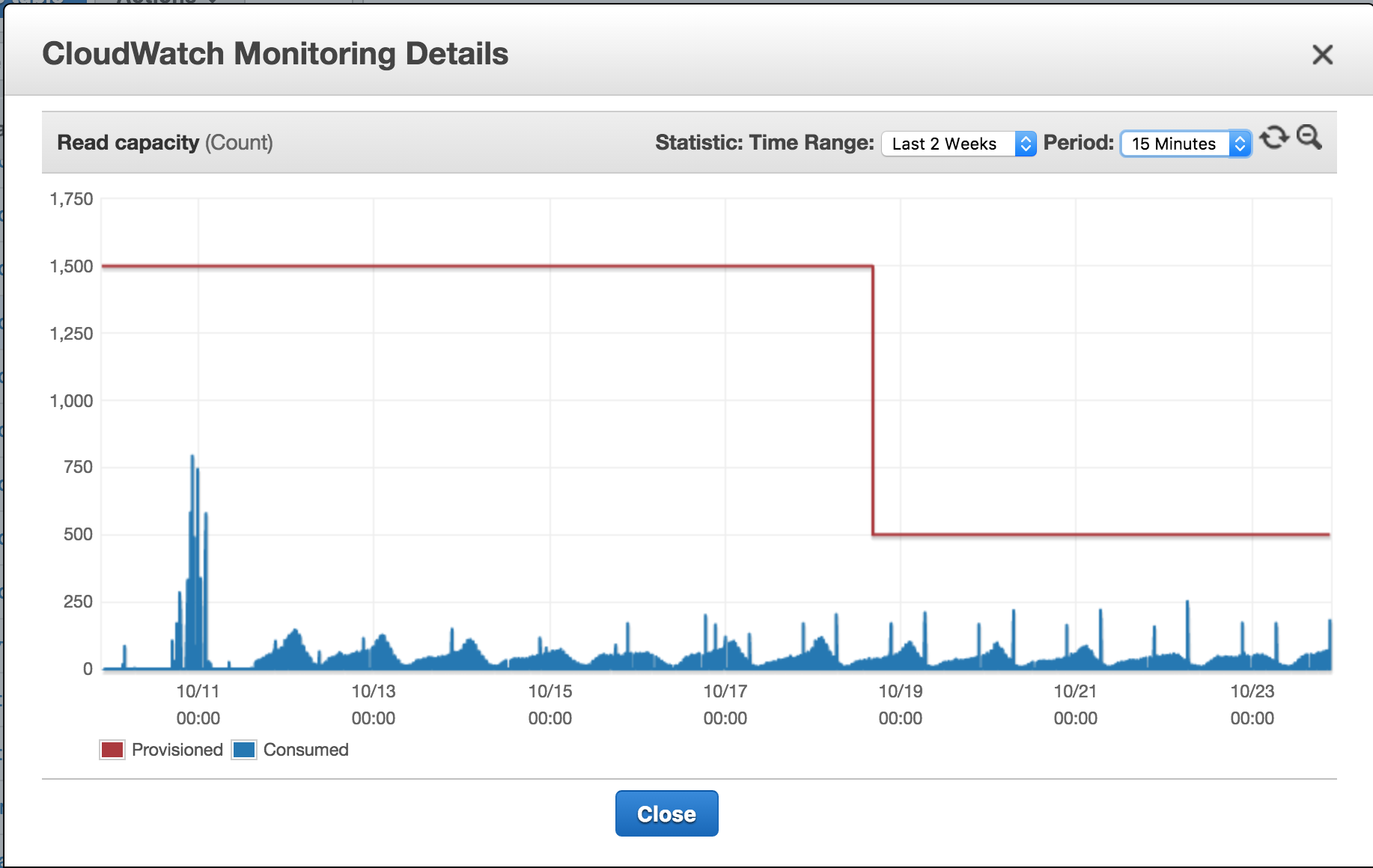

Read Capacity

Except for the first day, the graph looks pretty much cyclical and periodic, which is expected. We downscaled after some time since we were not using much of our provisioned throughput.

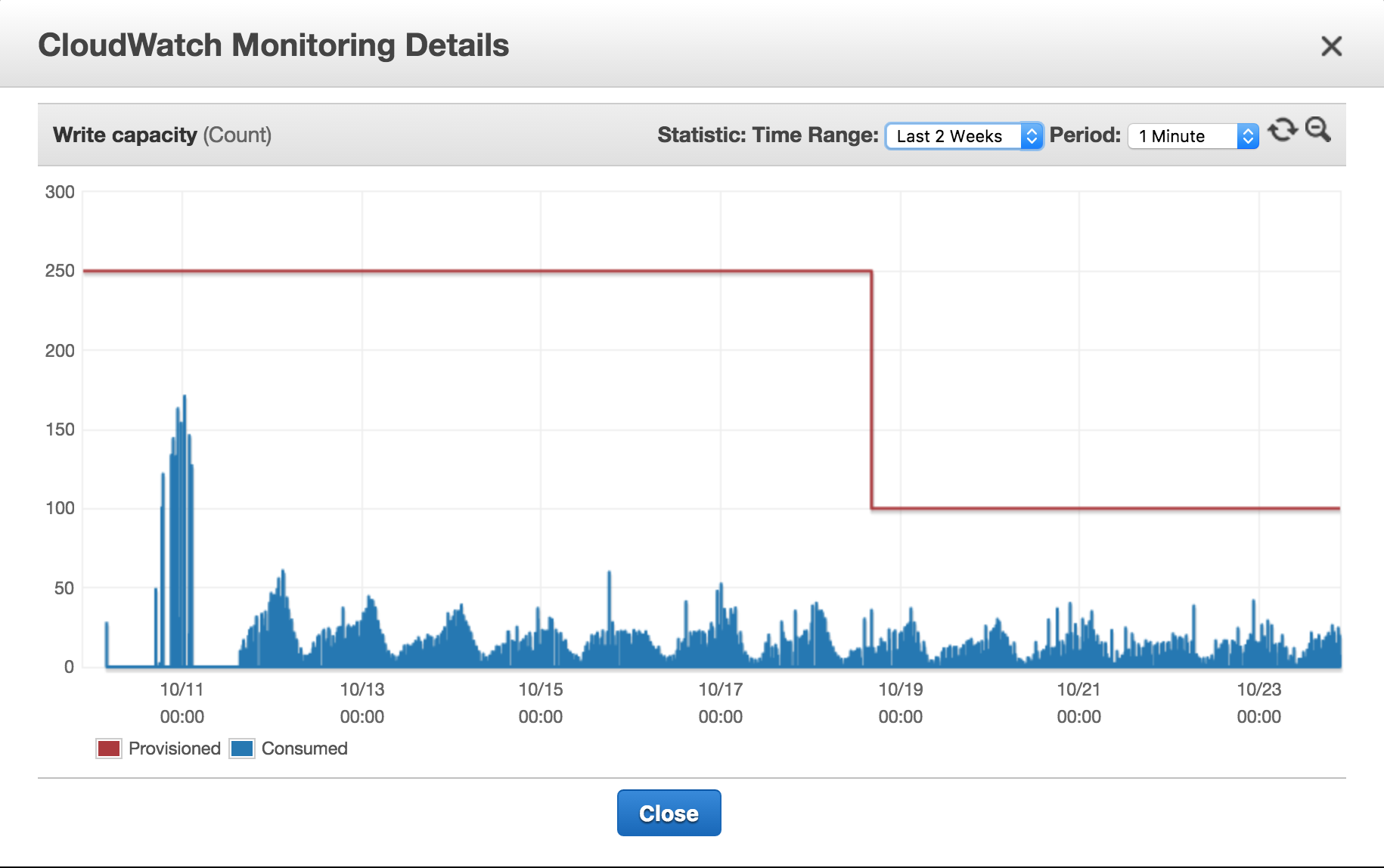

Write Capacity

Write capacity follows read capacity closely. As user activity grows, both reads and writes increase, and that is reflected in the consumed throughput.

So, what about the performance? At this point, we have many millions of ratings and a large volume of ratings happening every second.

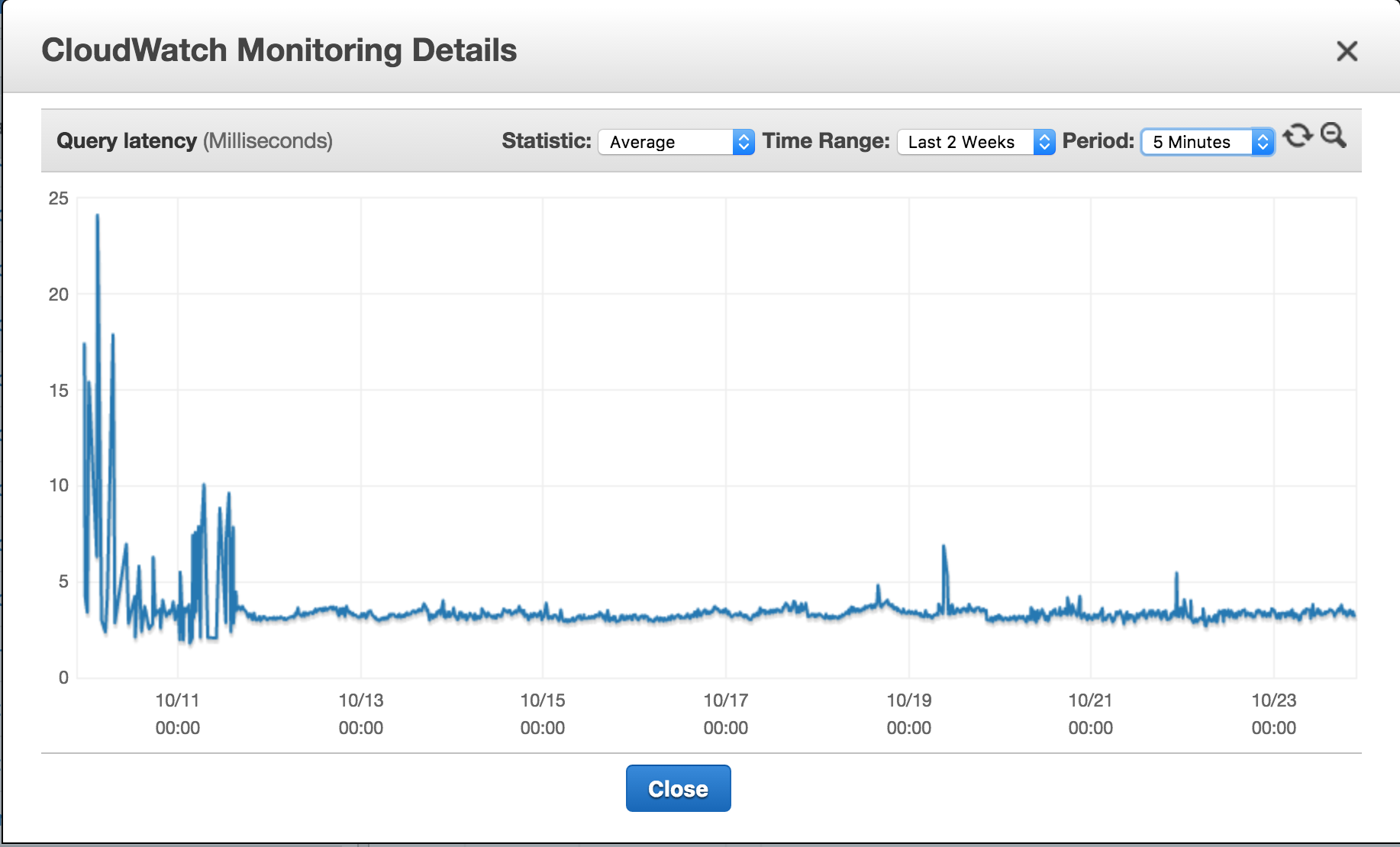

Read Latency

Except for the first day (activity burst on that day), DynamoDB consistently provides single-digit millisecond latency. What is amazing is that its performance does not degrade over time, because of how they partition the data and route the requests for a single query. We do not see timings below 4 milliseconds from the application, though; there we see 8-9 milliseconds.

Write Latency

Writes are more stable than reads even around the first day.

Conclusion

In software engineering, you can make technical decisions based on a number of factors: how much you know about it (I do not know anything about DynamoDB, we should not choose it), how bad your bias is around it (MongoDB is terrible, everything about that db is just terrible), how much you understand (I do not know any SQL, so we should go with NoSQL, better yet, MongoDB), how easy it would be to implement (SQLAlchemy already provides a connection to PostgreSQL, let’s go with that one), how much code you would need to remove (I already wrote a wrapper around DB A, we should not go with DB B), how interested you are in that area (DB is not my area, let’s implement some solution and move on), and so forth. All of these decision factors yield a suboptimal solution for the problem you have, because a decision made that way does not necessarily address the problem at hand, but rather your interpretation of what the problem is.

Looking back, I think this is one of the things that I am extremely proud of and will remember from my time at Hinge, not because the technology was cool (which it kind of is), but because we did our due diligence around the technology. We examined our needs, we knew what we needed from the database, and we did load testing and scalability testing before committing ourselves fully. All of our ducks were in a row; when I started the implementation, we did not have the slightest concern about the database's capability, since everything was so well planned and therefore predictable. We kept an open mind, since we did not want to end up putting out fires on the database under a large amount of load. I am not saying we did not have any biases; I think we had a huge bias that made us choose DynamoDB: we really did not want to host and manage the database ourselves. At least we acknowledged it and knew about it. It did not get lost in translation.

When mise en place is in action, the only thing left is crafting and implementing the solution itself, which is the easiest part.