Product Recommendations

As Jet.com focuses more and more on the grocery category, we have started facing challenges around inventory availability. To address them, we built a new module that shows substitutable products on the item page. This post explains the motivation (why), the design and implementation (how), and the resulting product (what).

Why is this important?

We want to show customers the most similar items, so they can buy a suitable substitute when the product they are looking for is unavailable. Previously, the out-of-stock (OOS) item page did not show any substitutable products when a customer reached it through the reorder or search pages.

Design Decisions

We made a few assumptions and design decisions at the start of this project, all aimed at solving the substitution problem.

First, we want to recommend products that can substitute for the anchor product (the product the customer is viewing). This also means we focus on our own catalog and recommend only products that are available in it.

Second, we want to provide recommendations as long as they are somehow related. If we do not have any blackberries on the website, the customer can still see blueberries, raspberries, or strawberries. In other words, we do not restrict ourselves to a single product type; we provide a wide selection of products even if not all of them are direct substitutes for the anchor product.

Third, we generate recommendations for every product in our catalog regardless of its availability status, and we do not constrain ourselves by the inventory availability of the product. This only makes sense, since we are trying to solve recommendations for OOS items.

Finally, we compute product similarity from product data rather than behavioral data, at least initially. There are multiple reasons for this:

- Behavioral data does not translate into substitutability of the product.

- Behavioral data does not necessarily tell anything about the products themselves, but rather the usage of the product.

- Behavioral data is impacted by seasonality, pricing, discounts, brands, zip codes, and intent of the users.

- Behavioral data is heavily biased toward products that have been on the site for a while and have a high sales rank.

- Behavioral data may be biased toward certain brands.

How do we use product data to generate recommendations?

Product Data

We have the following data for a given product:

{
  "id": "9fb0c9b4a7a84c0ca90d5e38a0b4352d",
  "title": "Bananas, Minimum 5 Ct",
  "description": "Your order will contain a minimum of 5 bananas. Sweet with a creamy texture, bananas are convenient for snacking, slicing into yogurt or ice cream, and using in many recipes. Bananas are an excellent source of vitamin B6, potassium and fiber.",
  "bullets": [
    "Storing: Refrigerating or freezing",
    "Ripen: Placing near other ripe fruit in a bowl",
    "Extend: Pulling the banana apart from the bunch",
    "Enjoy: Bananas can be enjoyed as a great healthy on-the-go snack, as part of a meal or desert, in a milkshake, with cereal or in a banana split"
  ],
  "product": [
    "banana",
    "fruit"
  ],
  "brand": "Fresh Produce"
}

The field values vary from product to product, but most products in the catalog have these keys. However, we need to represent this textual information in a way that lets us compute similarity between different products. This brings us to our next topic: the representation of the products.
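Before any of the representations below can be computed, each text field has to be split into tokens. A minimal sketch, assuming a simple lowercase alphanumeric tokenizer (the post does not describe its tokenizer) and a trimmed copy of the sample record with `description` deliberately left out to show how a missing key is handled:

```python
import re

# Trimmed copy of the sample record; "description" is omitted on purpose.
product = {
    "title": "Bananas, Minimum 5 Ct",
    "bullets": ["Storing: Refrigerating or freezing"],
    "product": ["banana", "fruit"],
    "brand": "Fresh Produce",
}

TOKEN_RE = re.compile(r"[a-z0-9]+")  # assumed tokenizer: runs of lowercase letters/digits


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep runs of letters and digits as tokens."""
    return TOKEN_RE.findall(text.lower())


def product_fields(record: dict) -> dict[str, list[str]]:
    """Return one token list per text field; list-valued fields are joined first."""
    fields = {}
    for key in ("title", "description", "bullets", "product"):
        value = record.get(key, "")  # most, but not all, products have every key
        if isinstance(value, list):
            value = " ".join(value)
        fields[key] = tokenize(value)
    return fields


fields = product_fields(product)
print(fields["title"])        # ['bananas', 'minimum', '5', 'ct']
print(fields["description"])  # []
```

The brand is kept out of the token lists here because it is used separately as a categorical feature later on.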

Representation

In order to compute a similarity measure across all of the products, we need to come up with a representation model for the product data. In this section, we are going to give background on text representation and explain the chosen method to represent the product.

Vector Space Model

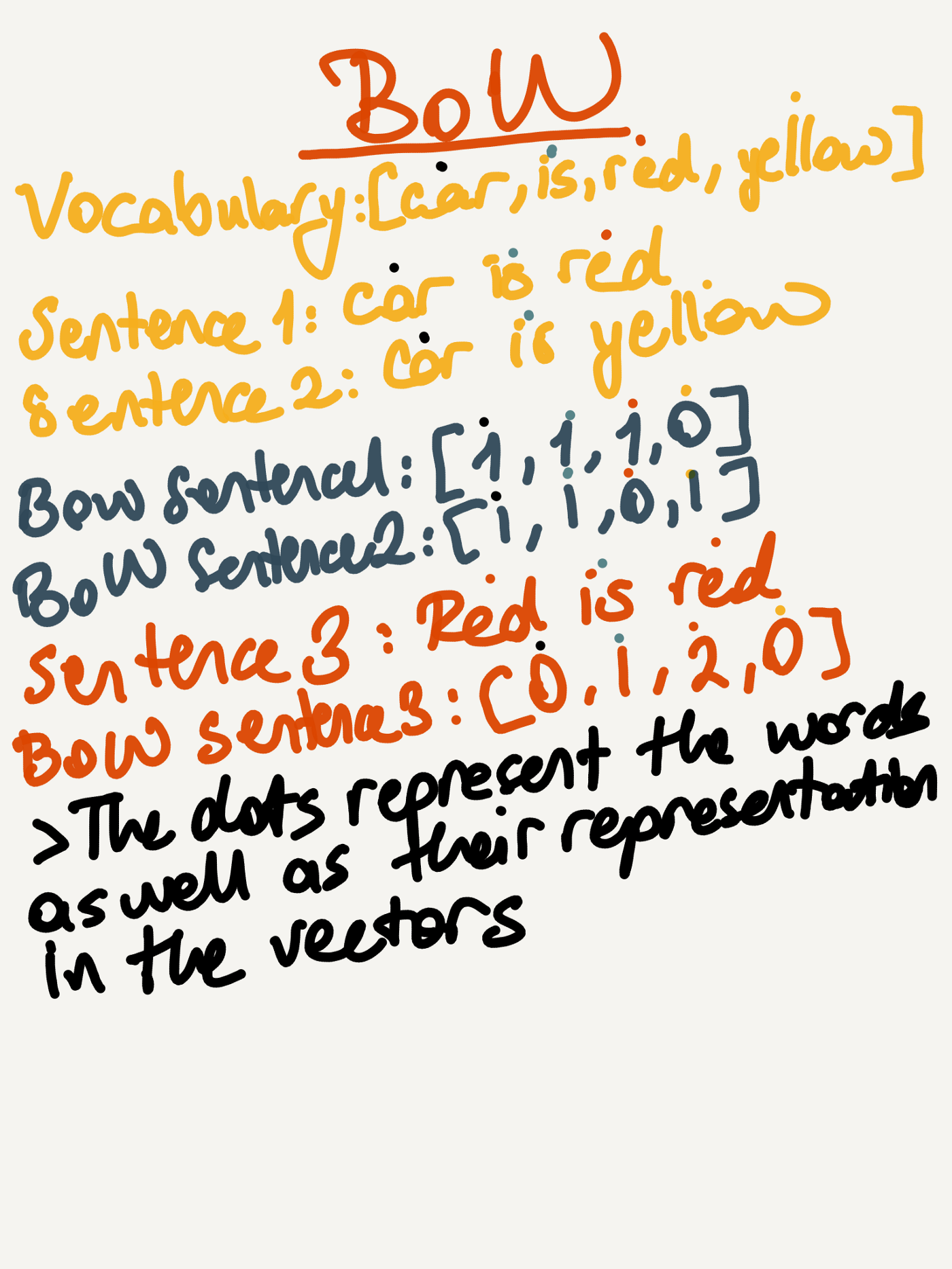

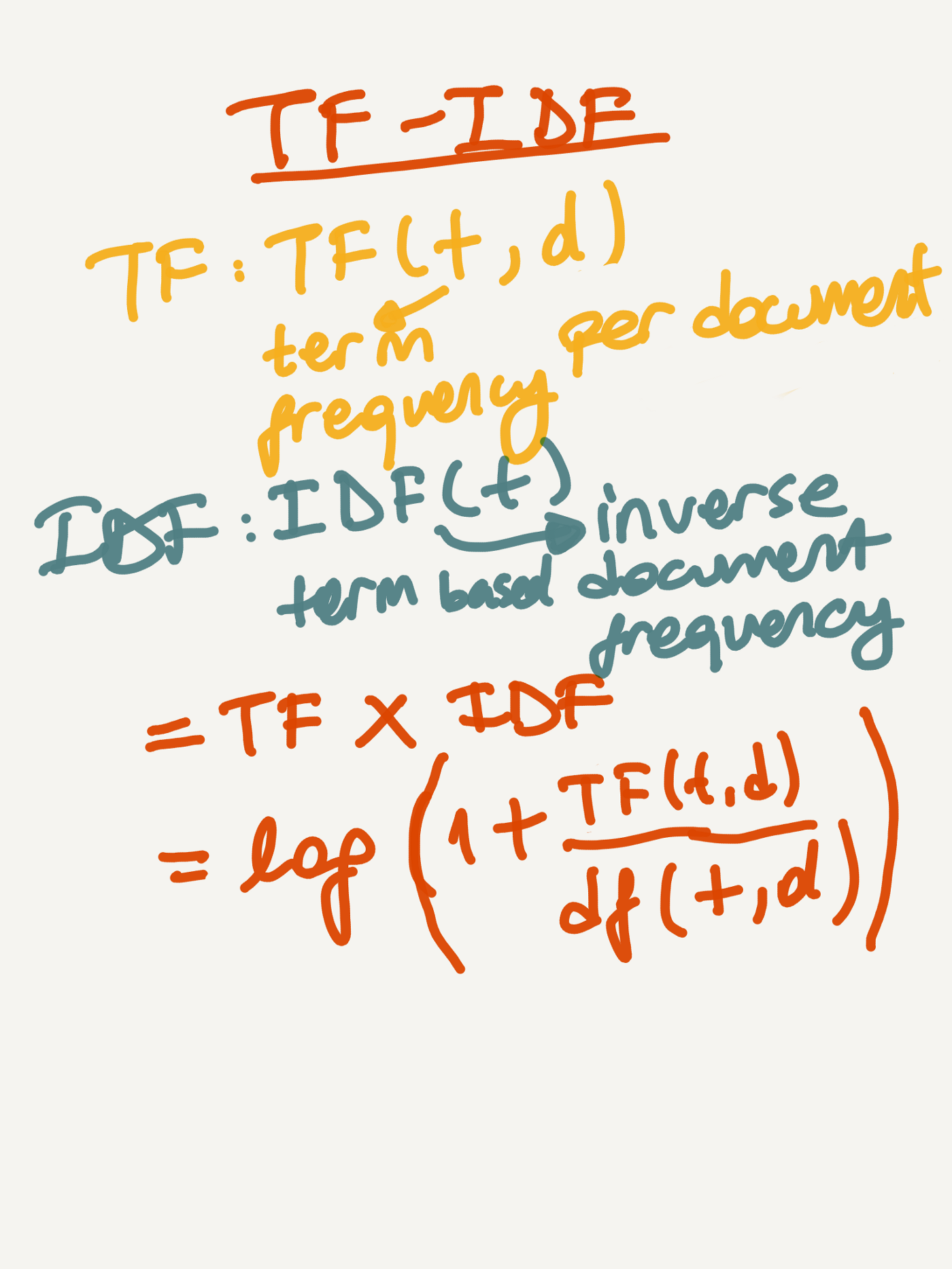

The Vector Space Model is the main way of representing documents as vectors in a common space, which allows us to compute a similarity measure between documents. Bag of Words (BoW) and TF-IDF build on top of the vector space model idea and define how each document is mapped to a vector in that space.

Both methods represent a sentence through the words it contains: BoW represents each word as an integer (its term frequency in the sentence), while TF-IDF represents it as a float (the term frequency multiplied by the inverse document frequency). The advantages of these approaches are:

- They are not computationally expensive.

- They do not need a large corpus to build the vector models.

The disadvantages include:

- They produce sparse vectors over a large corpus.

- They can only represent words as counts or as presence versus absence.

- They do not know the meaning of words.

- Negation is not represented well. For example, “He does not have a good sense of humor.” and “He has a good sense of humor.” have similar bag-of-words representations even though one negates the other.

- They are not aware of word order within a sentence.
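The negation and word-order weaknesses are easy to reproduce with a small standard-library sketch of BoW and TF-IDF. The whitespace tokenizer and the smoothed IDF formula (the same form scikit-learn uses by default) are choices made for this illustration:

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer that strips trailing punctuation."""
    return [t.strip(".,").lower() for t in text.split()]


def bow(doc, vocab):
    """Bag of Words: integer term counts, one dimension per vocabulary word."""
    counts = Counter(tokenize(doc))
    return [counts[w] for w in vocab]


def tfidf(docs, vocab):
    """TF-IDF: term count times smoothed IDF, log((1 + n) / (1 + df)) + 1."""
    n = len(docs)
    token_sets = [set(tokenize(d)) for d in docs]
    idf = {w: math.log((1 + n) / (1 + sum(w in s for s in token_sets))) + 1 for w in vocab}
    return [[tf * idf[w] for tf, w in zip(bow(d, vocab), vocab)] for d in docs]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


docs = ["He does not have a good sense of humor.", "He has a good sense of humor."]
vocab = sorted({t for d in docs for t in tokenize(d)})
print(round(cosine(*[bow(d, vocab) for d in docs]), 3))  # 0.756
print(round(cosine(*tfidf(docs, vocab)), 3))             # 0.615

# Word order is lost entirely: both sentences get the same BoW vector.
order_vocab = ["bites", "dog", "man"]
print(bow("dog bites man", order_vocab) == bow("man bites dog", order_vocab))  # True
```

Two sentences with opposite meanings still score around 0.76 under BoW; TF-IDF lowers this only somewhat, because the shared words still dominate both vectors.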

Word2Vec

The motivation behind word representation is that it is a fundamental building block for many natural language processing tasks. An efficient, relevant word representation that is independent of the task and context of the problem makes the original NLP problem easier to solve. Word2Vec is another method for the word representation problem. It is similar to TF-IDF and BoW, but with a significant difference: it learns an embedding for each individual word rather than a representation of the whole sentence. The original word2vec paper and its follow-up paper show how to train a large word2vec model over a corpus to get a distributed representation of the words in that corpus. The original paper was a breakthrough both in the novelty of the word representation and in what the embeddings capture semantically. For example, the embeddings captured the relationship between countries and their capital cities, and the gender associated with words such as “king” and “queen”.

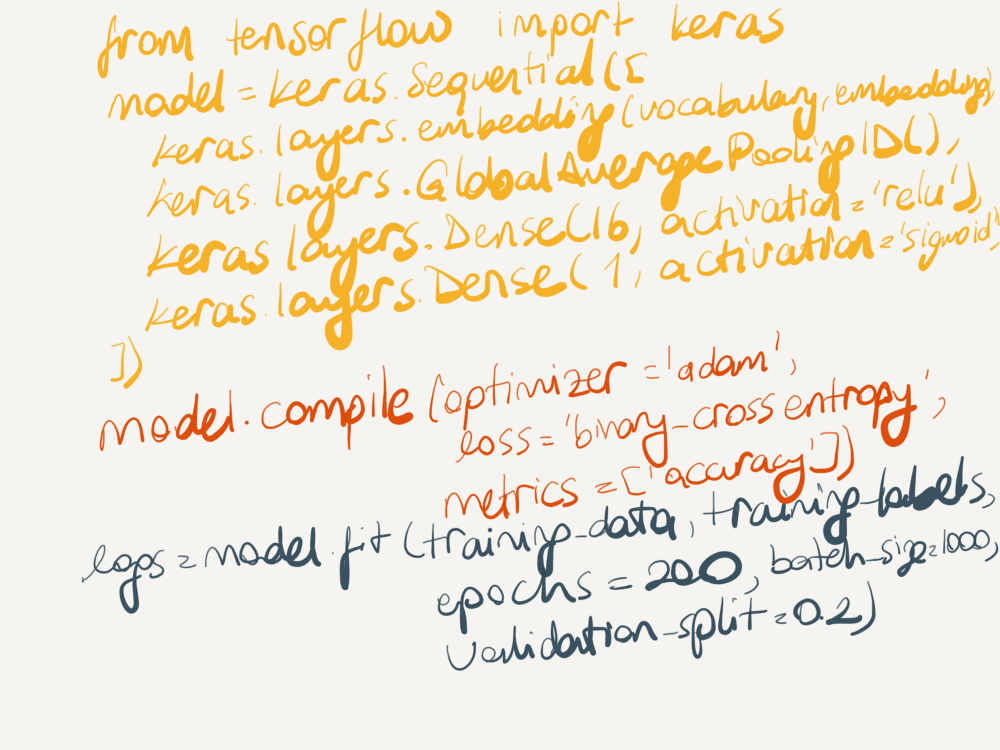

It is straightforward to implement word2vec as a shallow neural network in TensorFlow using the Keras interface. Here, we create a two-layer neural network whose embedding layer has shape (vocabulary size, embedding_size). After training, this layer serves as a lookup table that returns the embedding for each word.
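The post builds this network with Keras. To make the mechanics visible without a framework dependency, here is a NumPy stand-in for the same two-layer idea, using the CBoW objective that the post later reports working slightly better than skip-gram. The toy corpus, embedding_size of 8, window of 2, learning rate, and epoch count are arbitrary choices for this sketch, and it uses a full softmax rather than the negative sampling or hierarchical softmax that large-scale word2vec training typically relies on:

```python
import numpy as np

# Toy corpus, made up for illustration; the post trains on catalog titles, bullets and descriptions.
corpus = [
    "fresh ripe bananas great snack",
    "fresh ripe plantains great for cooking",
    "organic blackberries fresh berries",
    "organic raspberries fresh berries",
]
sentences = [s.split() for s in corpus]
vocab = sorted({w for sent in sentences for w in sent})
word_to_id = {w: i for i, w in enumerate(vocab)}
V, D, WINDOW = len(vocab), 8, 2  # vocabulary size, embedding_size, context window


def cbow_pairs(sentences, window):
    """Return (context_ids, target_id) pairs: the surrounding words predict the centre word."""
    pairs = []
    for sent in sentences:
        ids = [word_to_id[w] for w in sent]
        for i, target in enumerate(ids):
            context = ids[max(0, i - window):i] + ids[i + 1:i + 1 + window]
            if context:
                pairs.append((context, target))
    return pairs


def train_epoch(W_in, W_out, pairs, lr):
    """One pass of plain SGD with a full softmax; updates W_in and W_out in place."""
    total_loss = 0.0
    for context, target in pairs:
        h = W_in[context].mean(axis=0)      # layer 1: average the context embeddings
        logits = h @ W_out                   # layer 2: scores over the vocabulary
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()                 # softmax
        total_loss -= np.log(probs[target])  # cross-entropy for the true centre word
        grad_logits = probs.copy()
        grad_logits[target] -= 1.0           # d(loss)/d(logits)
        grad_h = W_out @ grad_logits         # backprop into the hidden vector (before updating W_out)
        W_out -= lr * np.outer(h, grad_logits)
        for c in context:                    # each context word gets an equal share of the gradient
            W_in[c] -= lr * grad_h / len(context)
    return total_loss / len(pairs)


rng = np.random.default_rng(0)
W_in = rng.normal(scale=0.1, size=(V, D))   # embedding layer: (vocabulary size, embedding_size)
W_out = rng.normal(scale=0.1, size=(D, V))  # output layer back to vocabulary scores
pairs = cbow_pairs(sentences, WINDOW)
losses = [train_epoch(W_in, W_out, pairs, lr=0.5) for _ in range(300)]

# After training, the embedding layer is just a lookup table.
banana_vec = W_in[word_to_id["bananas"]]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In a Keras version, `W_in` corresponds to an `Embedding` layer and `W_out` to a `Dense` output layer with a softmax activation; after training only the embedding weights are kept as the lookup table.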

Advantages of word2vec

- The vector has a fixed embedding size that is much smaller than the vocabulary. This lets us represent documents and products much more efficiently.

- Words themselves have “meaning” and a vector representation. Rather than being a single occurrence-based number treated independently of context, each word carries some meaning and can be compared with other words.

Disadvantages of word2vec

- We still have problems with out-of-vocabulary words. If a word is not in the training dataset, we cannot generate an embedding for it. To work around this, we map such words to an unknown token that has its own embedding.

- Because the model works at the word level rather than the character level, it cannot relate different forms of the same word (for example, “banana” and “bananas”), even when both forms appear in the training dataset; each form gets its own independent embedding.

- Misspelled words are treated as unique words in the corpus.

- Embeddings of rare words are generally not very useful, because the model sees too few examples of them to learn good representations.
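The unknown-token workaround and the word-level limitations above can be sketched with a plain vocabulary lookup. The `<unk>` name and the `min_count` threshold for dropping rare words are assumptions for this illustration; the post only says that out-of-vocabulary words share one embedding:

```python
from collections import Counter

UNK = "<unk>"  # hypothetical name for the shared unknown token


def build_vocab(sentences, min_count=2):
    """Map each frequent word to an id; id 0 is reserved for the unknown token."""
    counts = Counter(w for s in sentences for w in s.split())
    kept = sorted(w for w, c in counts.items() if c >= min_count)  # rare words are dropped
    return {w: i for i, w in enumerate([UNK] + kept)}


def lookup_ids(text, word_to_id):
    """Out-of-vocabulary words (unseen, rare, or misspelled) all fall back to the UNK id."""
    return [word_to_id.get(w, word_to_id[UNK]) for w in text.split()]


sentences = ["fresh banana", "fresh bananas", "ripe banana", "fresh berries"]
word_to_id = build_vocab(sentences)
print(word_to_id)                               # {'<unk>': 0, 'banana': 1, 'fresh': 2}
print(lookup_ids("fresh banan", word_to_id))    # [2, 0]: the misspelling becomes UNK
print(lookup_ids("fresh bananas", word_to_id))  # [2, 0]: the plural is a separate, rare word
```

Note that "bananas" is not connected to "banana" at all: a word-level vocabulary sees them as unrelated strings, which is exactly the limitation a character-level model avoids.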

Training

We took a model pretrained on a Wikipedia corpus and retrained it on our catalog, using titles, bullets, and descriptions as input. The pretrained baseline gives us coverage of words that appear in a large general corpus, and retraining on our catalog tailors the embeddings to it. In our evaluation, optimizing with the CBoW (Continuous Bag of Words) objective produced slightly better results than skip-gram.
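The post does not say how the Wikipedia vocabulary and the catalog vocabulary were reconciled. One plausible warm-start step, sketched here as an assumption, copies the pretrained vector for every word the two vocabularies share and randomly initializes catalog-only words before retraining. The function name and the toy vectors are hypothetical:

```python
import numpy as np


def warm_start_embeddings(pretrained, catalog_vocab, dim, seed=0):
    """Build the catalog embedding matrix, copying pretrained rows where the word exists.

    pretrained: dict word -> vector from a general-corpus model (hypothetical input).
    Catalog-only words get a small random initialization and are learned during retraining.
    """
    rng = np.random.default_rng(seed)
    matrix = rng.normal(scale=0.1, size=(len(catalog_vocab), dim))
    hits = 0
    for i, word in enumerate(catalog_vocab):
        vec = pretrained.get(word)
        if vec is not None:
            matrix[i] = vec
            hits += 1
    return matrix, hits / len(catalog_vocab)  # coverage: share of catalog words pretrained


# Toy pretrained vectors, made up for illustration (dim=3).
pretrained = {"banana": np.array([0.2, 0.1, 0.0]), "fruit": np.array([0.3, 0.0, 0.1])}
catalog_vocab = ["banana", "fruit", "plantain", "ct"]
matrix, coverage = warm_start_embeddings(pretrained, catalog_vocab, dim=3)
print(coverage)  # 0.5
```

Retraining then updates this matrix with the CBoW objective over the catalog text, so catalog-specific words such as brand names are learned while shared words start from their general-corpus meaning.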

Product2Vec

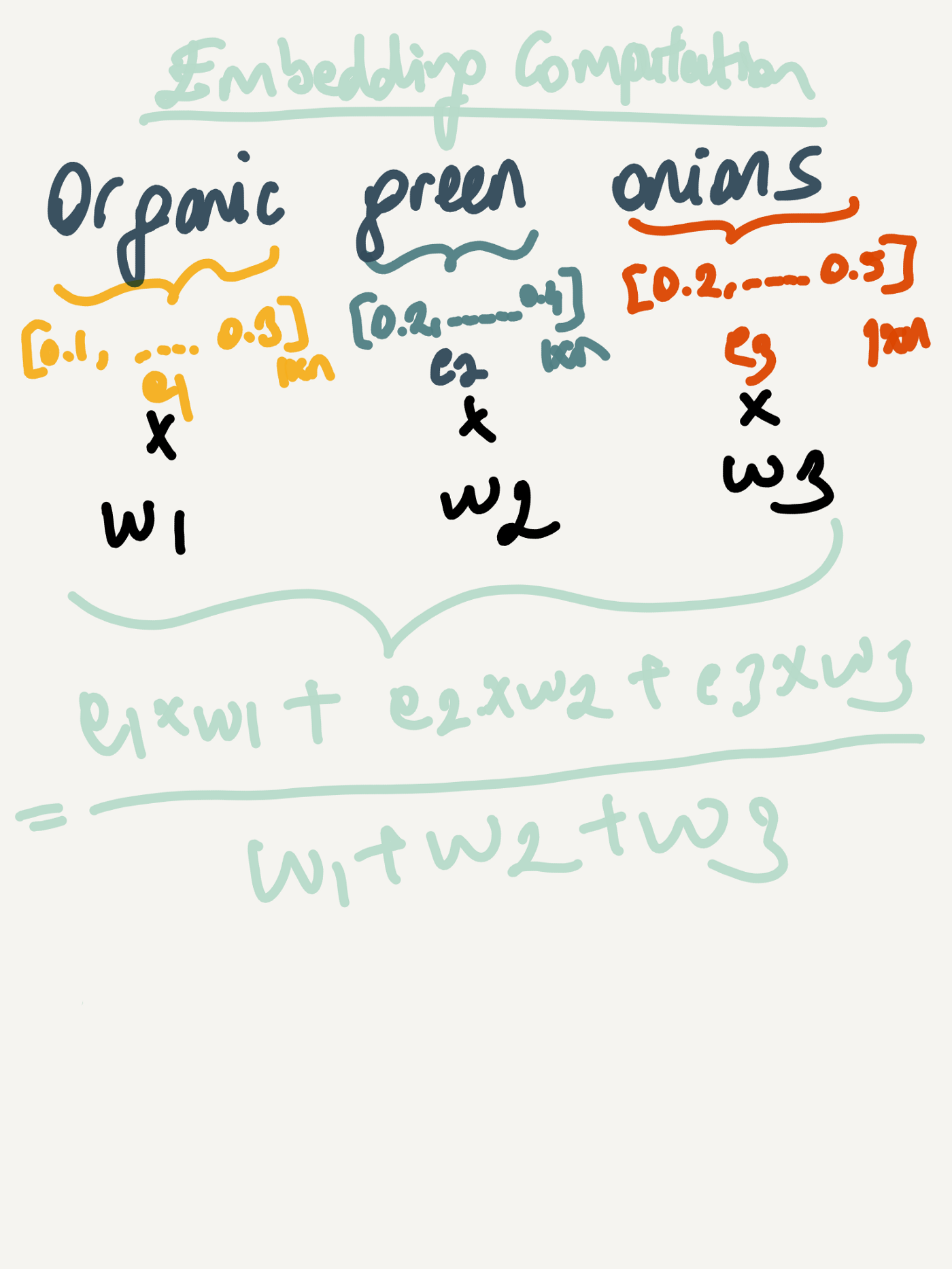

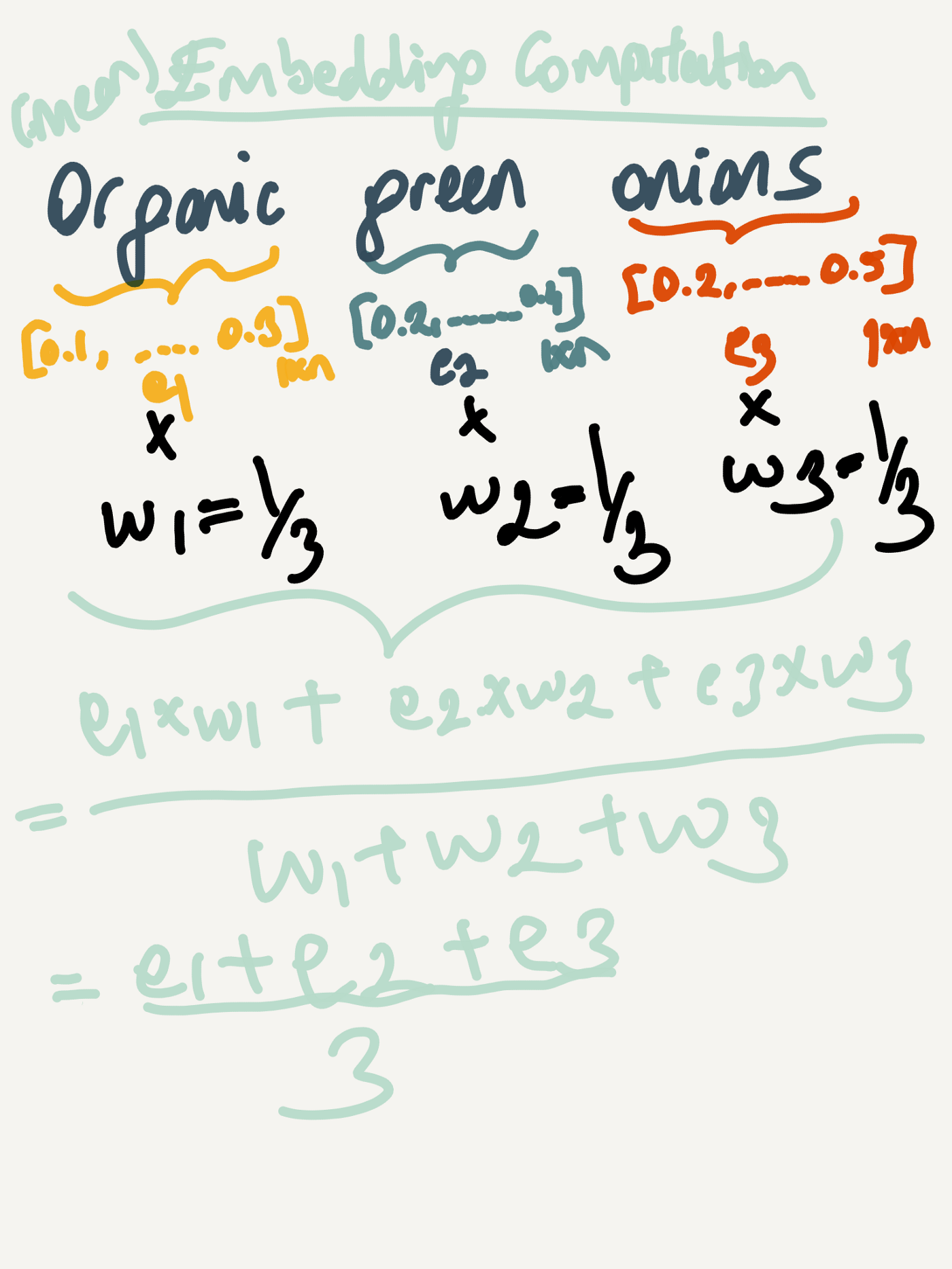

Ultimately, we want to represent each product as an embedding. For each field of the product data, we combine the word embeddings into a TF-IDF-weighted average.

This representation gives more weight to words that appear less often in the corpus, and because we compute TF-IDF per category, it boosts brand names within a given category. We also tried a plain average in which every word representation is weighted equally.
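Written out as a sketch, assuming the weighted version is normalized by the sum of its weights (the exact normalization is not stated), the two variants for field f of product p in category c are shown below. Both sums run over the distinct words W_{p,f} of the field, e_w is the word2vec embedding of word w, and tfidf_c(w, p) is the TF-IDF weight of w in p with document frequencies counted over the products of category c:

```latex
\mathbf{v}^{\mathrm{tfidf}}_{p,f}
  = \frac{\sum_{w \in W_{p,f}} \mathrm{tfidf}_c(w, p)\,\mathbf{e}_w}
         {\sum_{w \in W_{p,f}} \mathrm{tfidf}_c(w, p)}
\qquad
\mathbf{v}^{\mathrm{avg}}_{p,f}
  = \frac{1}{\lvert W_{p,f} \rvert} \sum_{w \in W_{p,f}} \mathbf{e}_w
```

The plain average is the special case in which every tfidf_c(w, p) is replaced by the same constant.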

However, averaging without TF-IDF weights resulted in lower classification accuracy. Therefore, we used TF-IDF-weighted embeddings for each field of the product data.
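A minimal sketch of both variants, assuming IDF is computed over the products of a single category (as the post describes), that weights are tf × idf with a +1 offset so words present in every product keep some weight, and that each field is reduced to its distinct words. The category, the "acme" brand word, and the 2-D embeddings are made up:

```python
import math
from collections import Counter

import numpy as np


def category_idf(category_docs):
    """IDF for every word, computed only over the products of a single category."""
    n = len(category_docs)
    df = Counter(w for doc in category_docs for w in set(doc))
    # +1 keeps words that occur in every product from getting zero weight (an assumption)
    return {w: math.log(n / d) + 1.0 for w, d in df.items()}


def field_embedding(tokens, idf, emb, weighted=True):
    """Combine the embeddings of a field's distinct words into one vector."""
    counts = Counter(t for t in tokens if t in emb)  # skip words without an embedding
    if not counts:
        return np.zeros_like(next(iter(emb.values())))
    words = list(counts)
    if weighted:
        weights = np.array([counts[w] * idf.get(w, 1.0) for w in words])  # tf * idf
    else:
        weights = np.ones(len(words))  # plain average: every word counts equally
    vectors = np.stack([emb[w] for w in words])
    return weights @ vectors / weights.sum()


# Toy category of three products and made-up 2-D word embeddings.
produce = [["acme", "banana", "fruit"], ["banana", "fruit"], ["plantain", "fruit"]]
emb = {"acme": np.array([1.0, 0.0]), "banana": np.array([0.0, 1.0]),
       "fruit": np.array([0.0, 0.0]), "plantain": np.array([0.5, 0.5])}
idf = category_idf(produce)
v_tfidf = field_embedding(produce[0], idf, emb)
v_avg = field_embedding(produce[0], idf, emb, weighted=False)
print(v_tfidf.round(3), v_avg.round(3))
```

Because "acme" appears in only one product of this category, its higher IDF pulls the weighted vector toward its embedding, which is the per-category brand boost described above; the plain average gives it the same weight as the ubiquitous "fruit".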

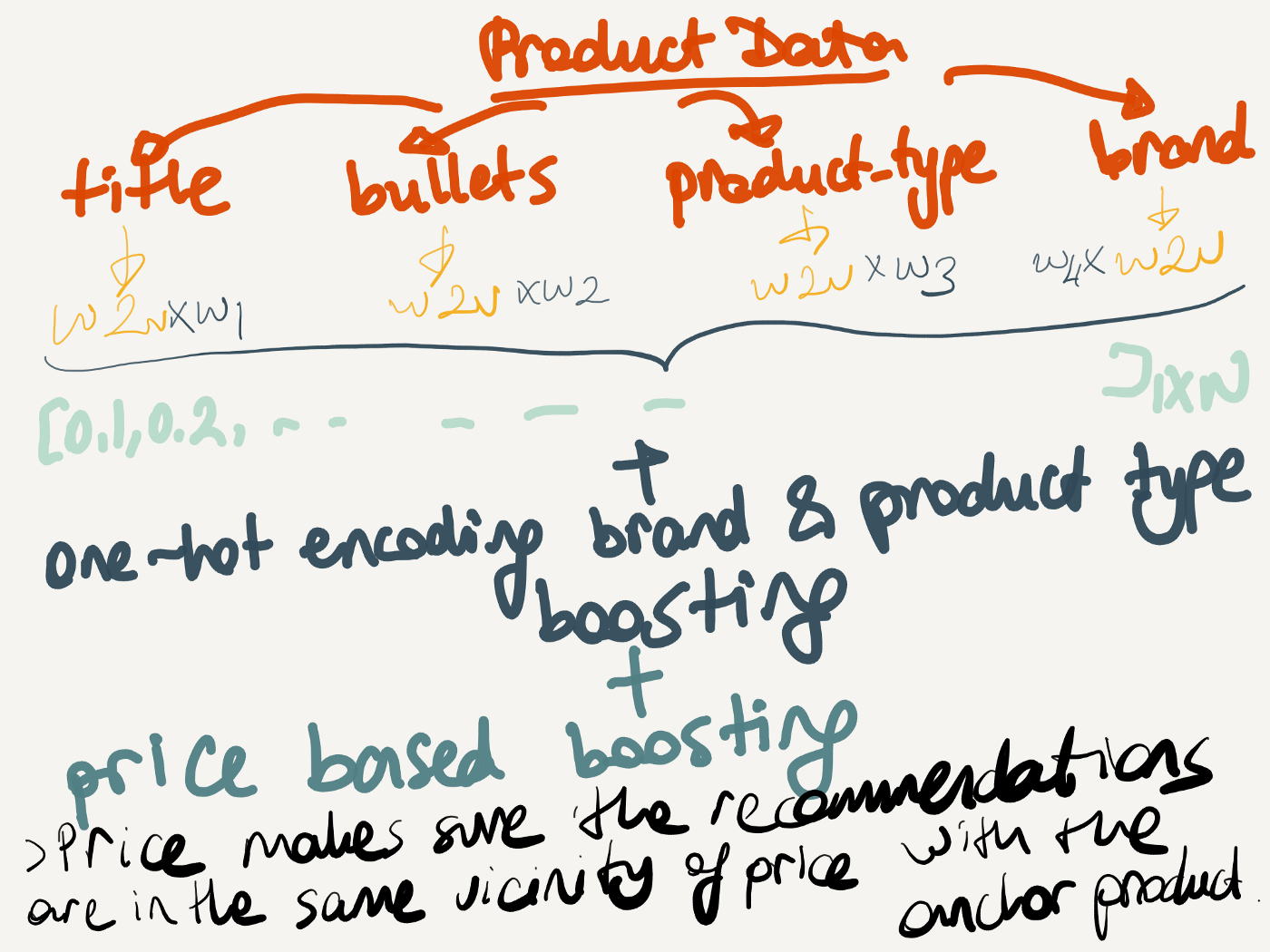

The figure above outlines the overall embedding generation. We learn the weights w1, w2, w3, and w4 through hyperparameter optimization with cross-validation. Appending one-hot encodings of brand and product type, together with boosting, acts as a reranking step: candidates that match the anchor on both features are prioritized over candidates that match on only brand or only product type. Price-based boosting is enabled only when the prices of the anchor product and a candidate differ by more than 20% and the products are in certain categories (such as electronics and fashion).
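The exact combination formula is not given, so the following is one reading of it, not the production code: a weighted sum of per-field embeddings (the assignment of w1..w4 to title, bullets, description, and product type is hypothetical), followed by one-hot brand and product-type vectors scaled by a boost factor. With inner-product scoring, matching on both features adds 2·boost² to the score and matching on one adds boost². The price rule encodes only the stated condition; what the boost then does to the score is not described, so it is left out:

```python
import numpy as np

# Hypothetical: which field each of w1..w4 weights, and the one-hot vocabularies.
FIELDS = ("title", "bullets", "description", "product")
BRANDS = ["acme", "fresh produce"]
TYPES = ["banana", "plantain", "berries"]


def one_hot(value, values):
    vec = np.zeros(len(values))
    if value in values:
        vec[values.index(value)] = 1.0
    return vec


def product_vector(field_vecs, brand, ptype, w, boost):
    """Weighted sum of field embeddings, normalized, then one-hot brand/type scaled by boost."""
    text = sum(wi * field_vecs[f] for wi, f in zip(w, FIELDS))
    text = text / np.linalg.norm(text)
    return np.concatenate([text, boost * one_hot(brand, BRANDS), boost * one_hot(ptype, TYPES)])


def price_boost_enabled(anchor_price, candidate_price, category,
                        categories=("electronics", "fashion"), threshold=0.20):
    """Price-based boosting applies only to price gaps above 20% in selected categories."""
    gap = abs(candidate_price - anchor_price) / anchor_price
    return category in categories and gap > threshold


w = (0.4, 0.3, 0.2, 0.1)  # placeholder values; the post tunes w1..w4 with cross-validation
same_text = {f: np.array([1.0, 0.0, 0.0]) for f in FIELDS}  # identical text for every product
anchor = product_vector(same_text, "acme", "banana", w, boost=0.5)
both = product_vector(same_text, "acme", "banana", w, boost=0.5)
brand_only = product_vector(same_text, "acme", "plantain", w, boost=0.5)
neither = product_vector(same_text, "fresh produce", "berries", w, boost=0.5)
print(anchor @ both, anchor @ brand_only, anchor @ neither)
print(price_boost_enabled(10.0, 13.0, "electronics"))
```

Holding the text embedding fixed isolates the reranking effect: the three candidates differ only in how many of the two categorical features they share with the anchor.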

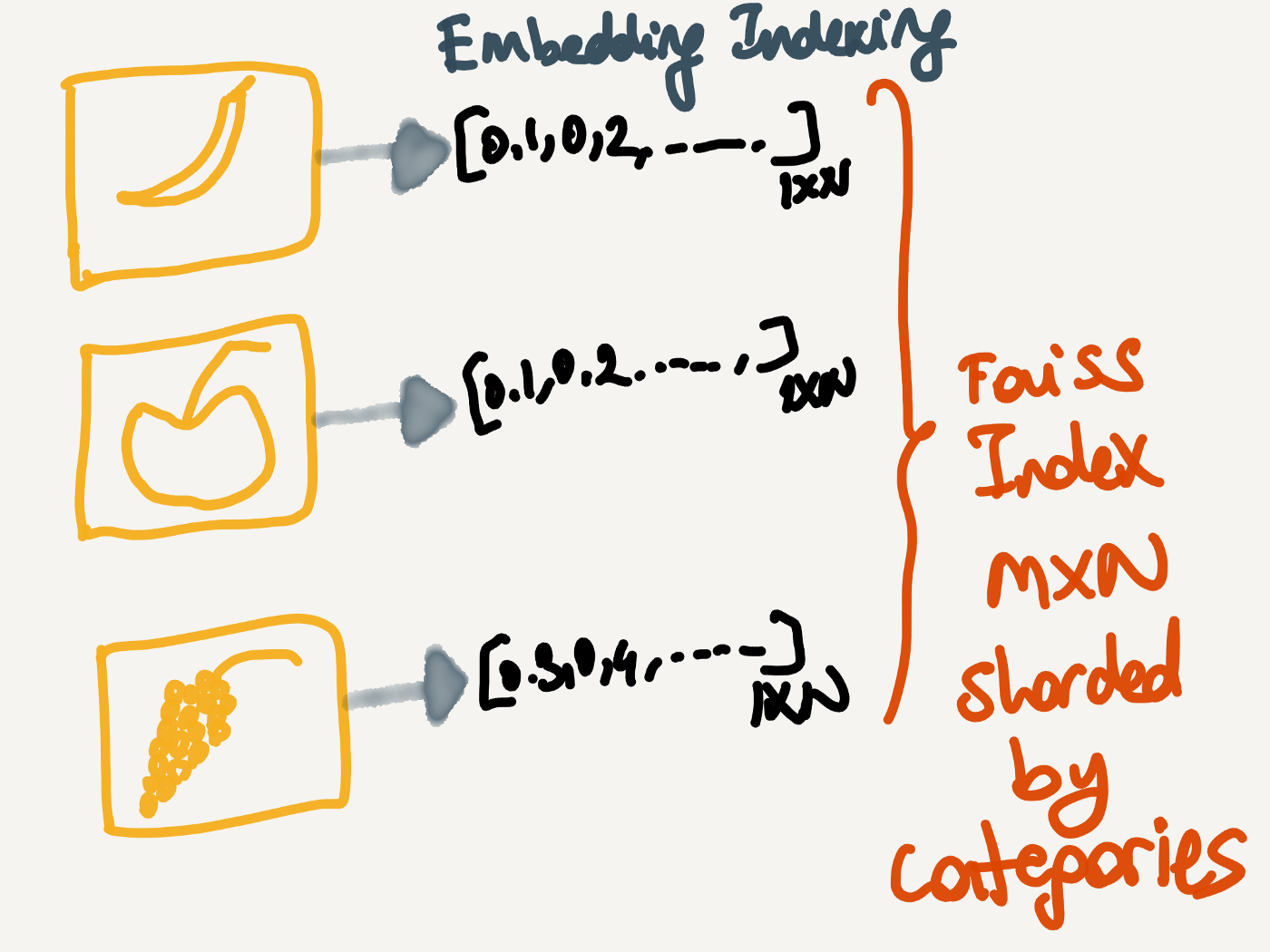

Indexing Architecture

With the embeddings in place, the indexing architecture is quite simple. We use FAISS to store the embeddings and compute similarity between product embeddings.

Because recommendation candidates are restricted to the anchor product's category, we shard FAISS by category, which keeps each index small and makes the similarity computation across embeddings faster. When generating recommendations, we know the category of the product, so we can query the corresponding FAISS shard directly. We search for the product within its category shard and take the candidates with the closest distance as recommendations. We then store these recommendations in DocDB, keyed by the anchor product. On the API side, we look up the recommendations by anchor product and serve them to the front end.
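The flow above can be sketched end to end. To keep the example self-contained, a brute-force NumPy search stands in for FAISS (an exact flat inner-product index such as `faiss.IndexFlatIP` over L2-normalized vectors performs the same search), and a plain dict stands in for DocDB. The class and function names, product ids, and vectors are hypothetical:

```python
from collections import defaultdict

import numpy as np


class ShardedIndex:
    """One exact inner-product index per category, standing in for a FAISS shard."""

    def __init__(self):
        self.ids = defaultdict(list)
        self.vectors = defaultdict(list)

    def add(self, category, product_id, vector):
        v = np.asarray(vector, dtype=np.float32)
        self.ids[category].append(product_id)
        self.vectors[category].append(v / np.linalg.norm(v))  # unit length: inner product = cosine

    def search(self, category, query, k):
        """Return up to k (product_id, score) pairs from this category's shard only."""
        matrix = np.stack(self.vectors[category])
        q = np.asarray(query, dtype=np.float32)
        scores = matrix @ (q / np.linalg.norm(q))
        top = np.argsort(-scores)[:k]
        return [(self.ids[category][i], float(scores[i])) for i in top]


def build_recommendations(index, products, k=3):
    """Precompute recommendations per anchor; a dict stands in for the DocDB collection."""
    store = {}
    for pid, (category, vector) in products.items():
        hits = index.search(category, vector, k + 1)  # k + 1: the anchor usually finds itself
        store[pid] = [hit for hit, _ in hits if hit != pid][:k]
    return store


products = {  # made-up 3-D embeddings
    "blackberries": ("produce", [1.0, 0.1, 0.0]),
    "organic-blackberries": ("produce", [0.95, 0.15, 0.0]),
    "raspberries": ("produce", [0.8, 0.4, 0.0]),
    "bananas": ("produce", [0.1, 1.0, 0.0]),
    "hdmi-cable": ("electronics", [0.0, 0.0, 1.0]),
}
index = ShardedIndex()
for pid, (category, vector) in products.items():
    index.add(category, pid, vector)
store = build_recommendations(index, products)
print(store["blackberries"])  # ['organic-blackberries', 'raspberries', 'bananas']
print(store["hdmi-cable"])    # []: its shard holds no other products
```

At request time the API only does `store[anchor_id]`, so serving cost is a key lookup; all similarity search happens offline, one category shard at a time.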

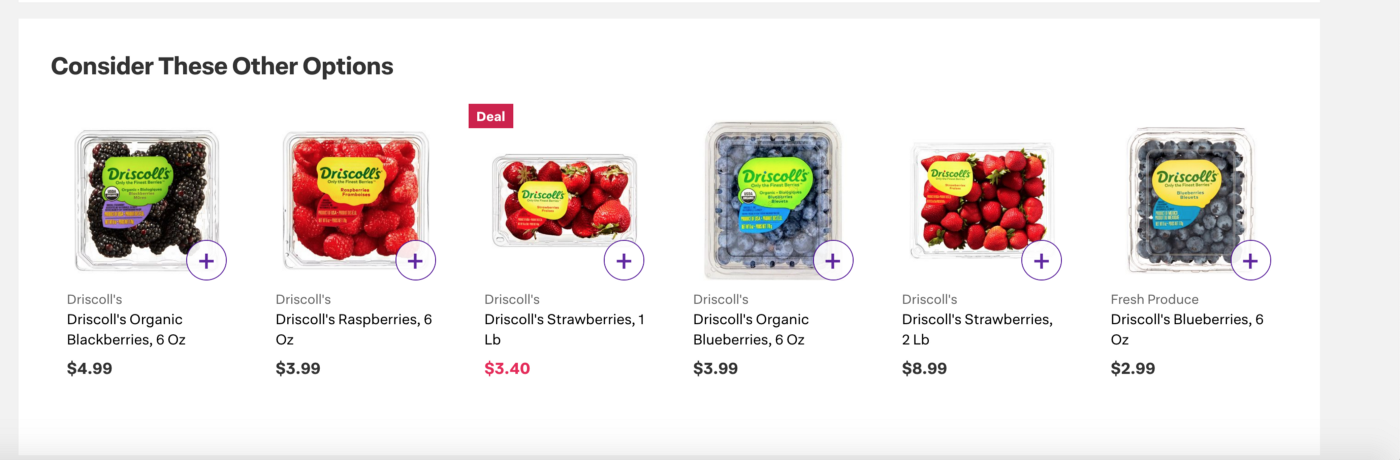

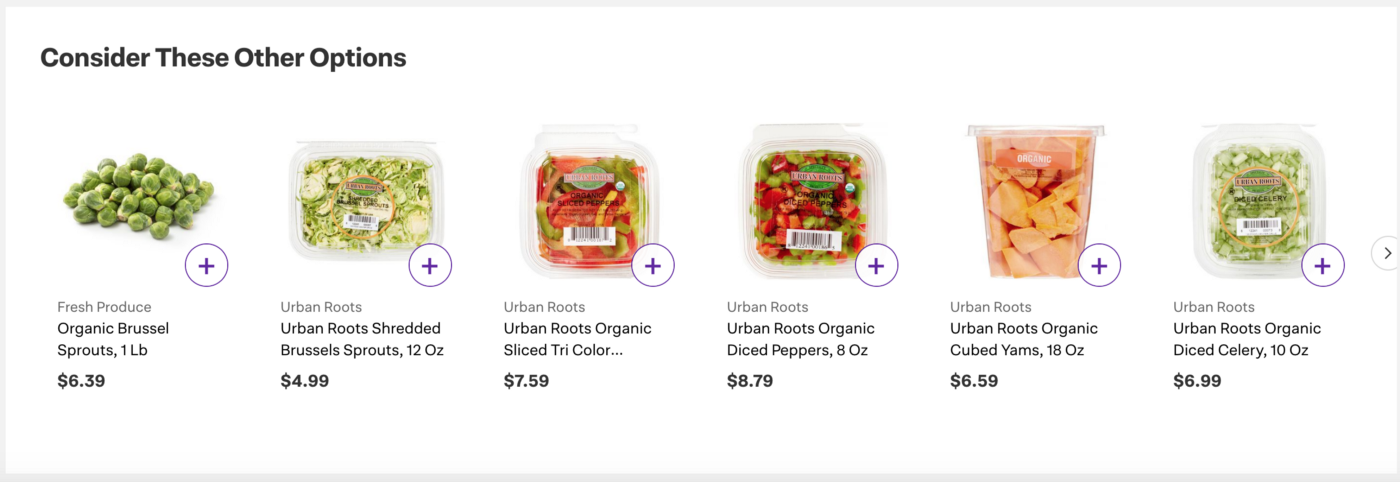

What does it look like?

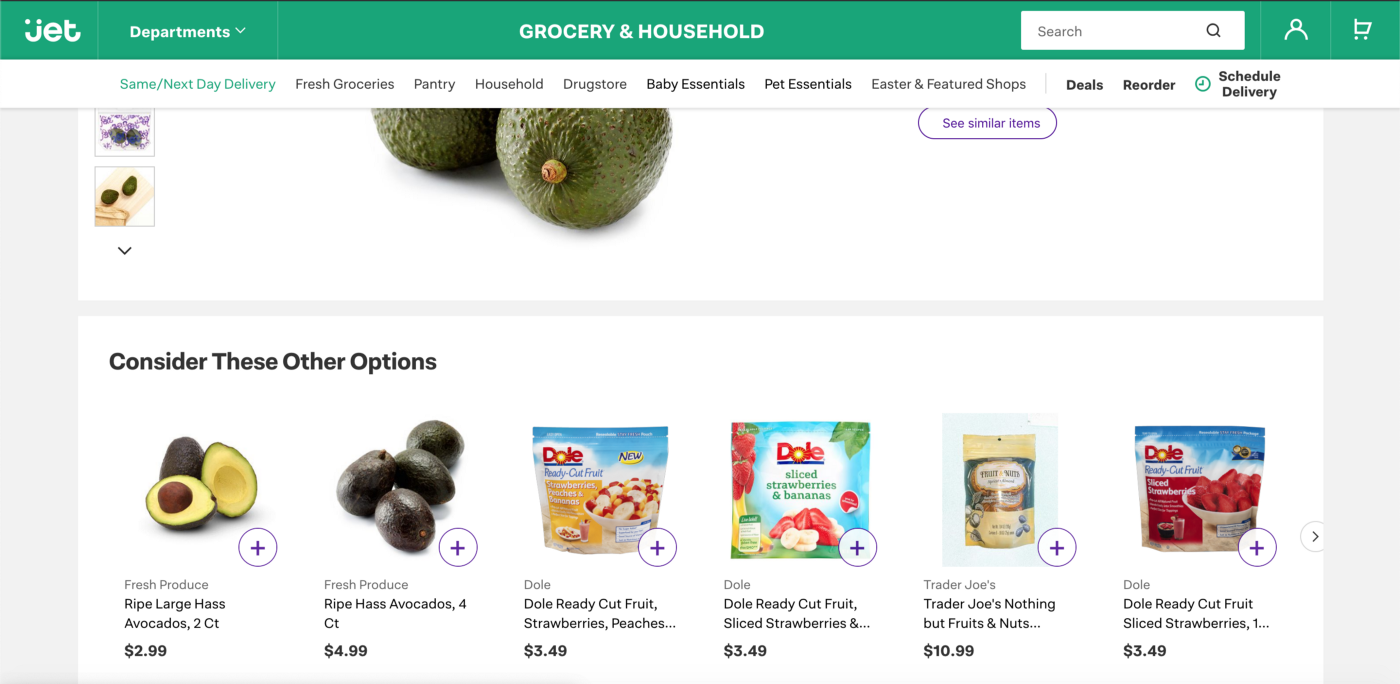

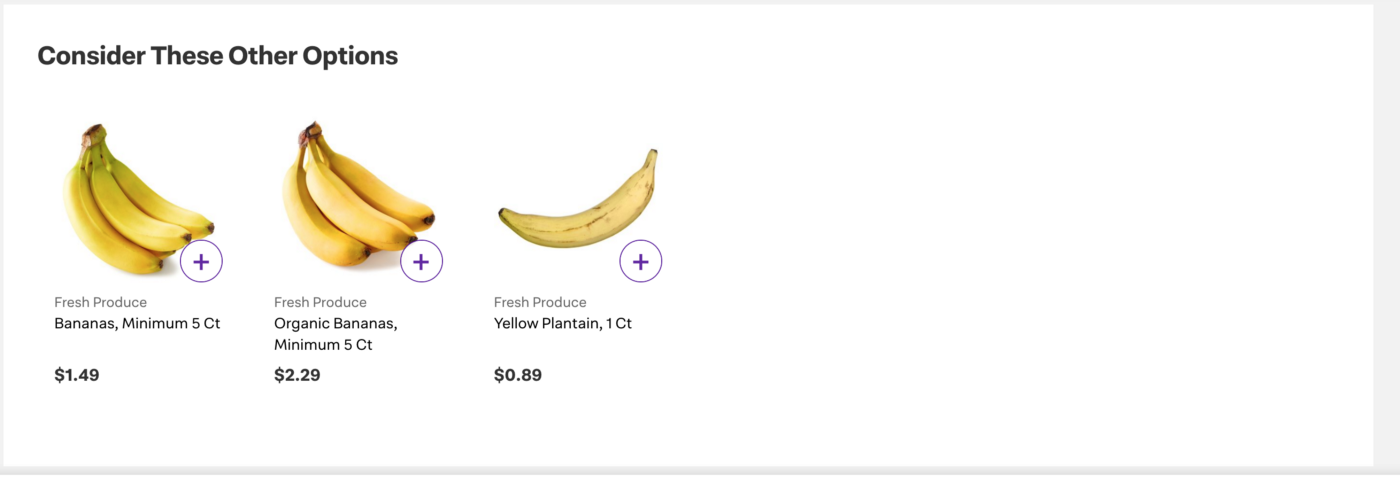

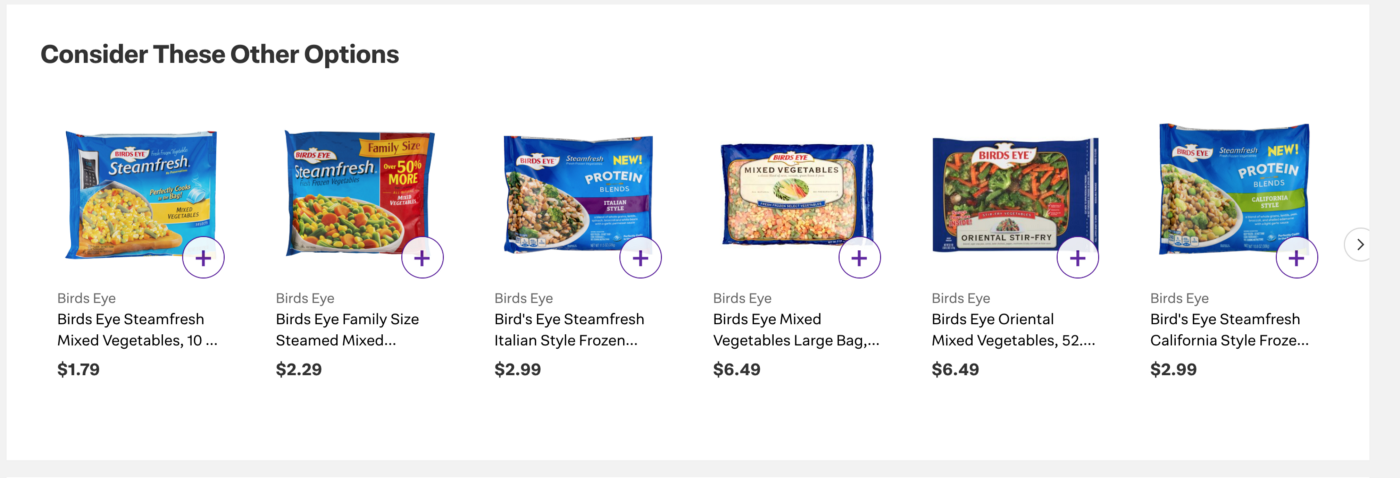

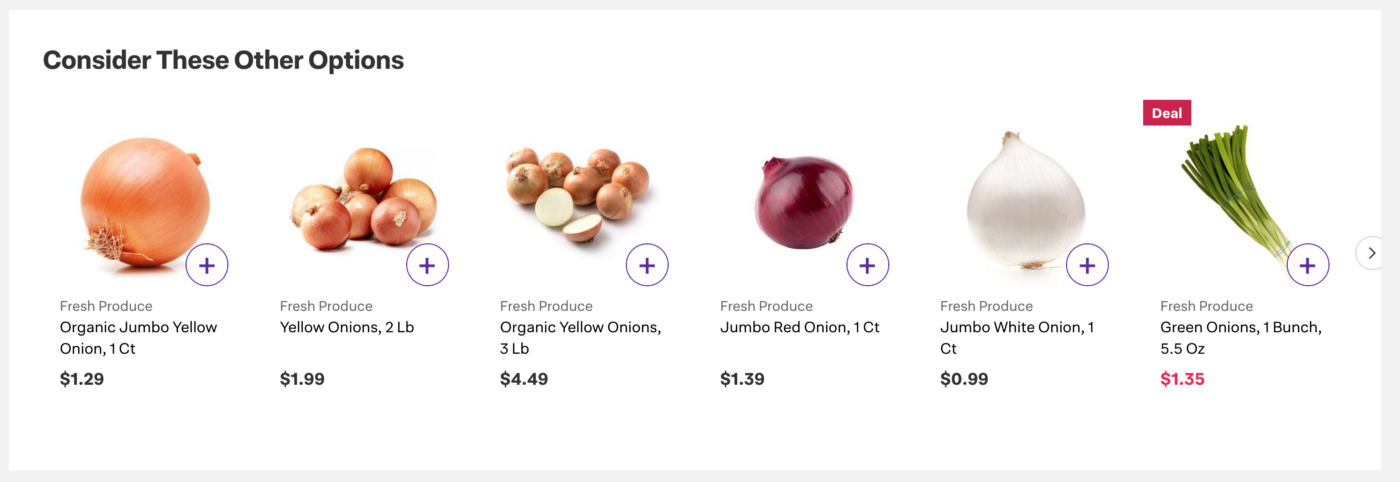

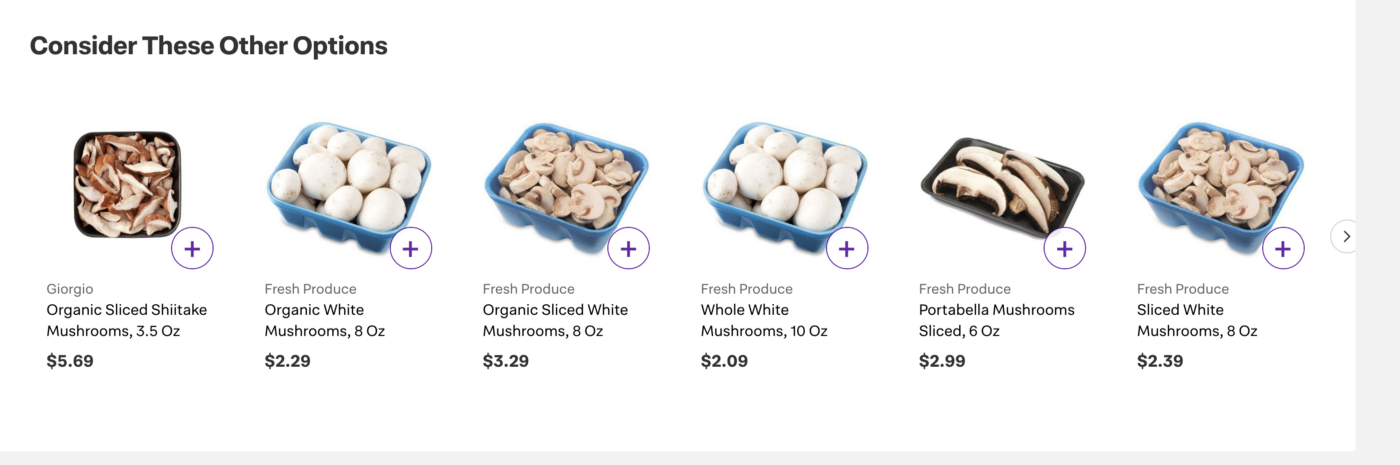

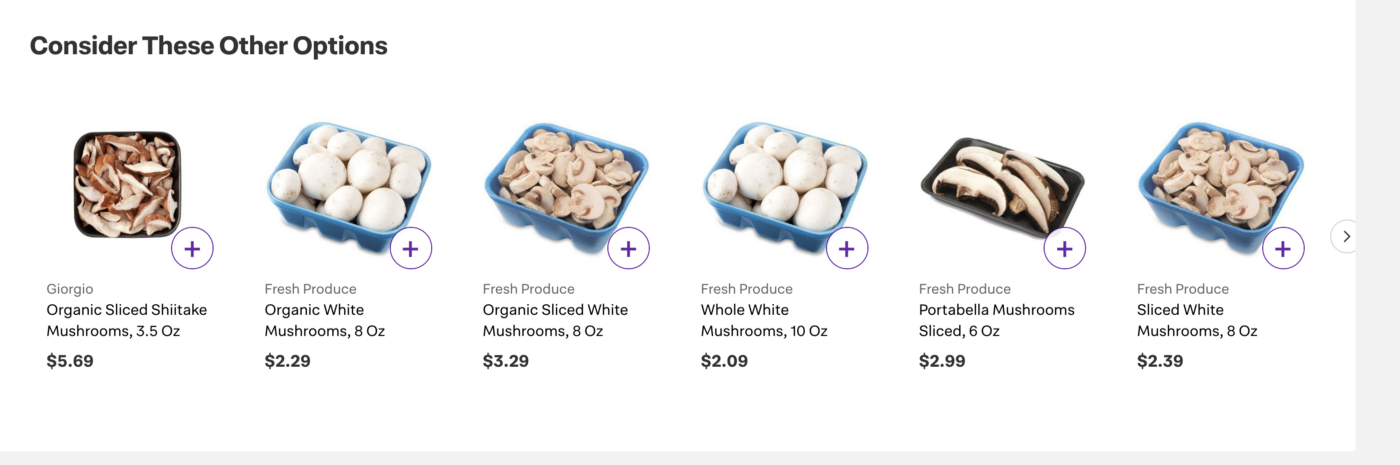

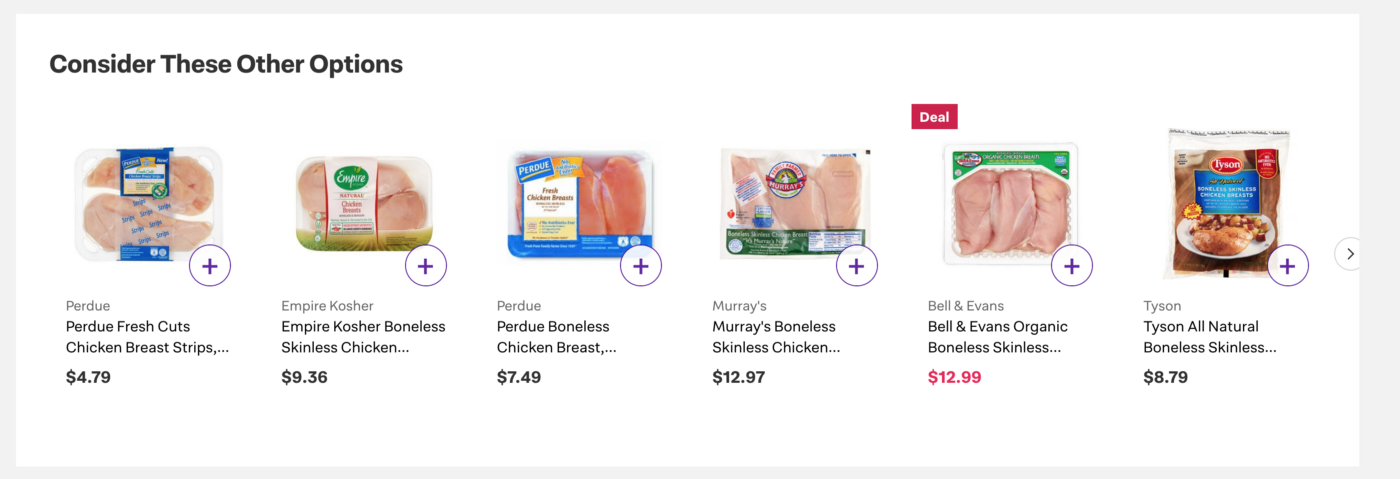

I would be remiss not to show what it looks like on the website. This module shows all of the recommendations under the title “Consider These Other Options”.

For a product like blackberries, we sell only a small number of products (two) on the website. However, this does not prevent us from offering the customer organic blackberries first, followed by raspberries and blueberries. Note that brand boosting works especially well when a brand focuses on a specific category.

For avocados, we first show two avocado products and then various other fruits.

This example works well: the first two positions have the correct product type, and because word2vec places plantains and bananas close to each other, we can recommend plantains in the third position. The remaining screenshots show more good examples.

Moving Forward

- ELMo and BERT are two newer models that produce contextual word vectors, so the vector for a word can differ depending on where it appears.

- The Word2Vec model we used is word-based, whereas ELMo builds its input representations from characters. Because of this, ELMo can handle out-of-vocabulary words very well.

- Poincaré embeddings can learn much lower-dimensional embeddings by capturing hierarchical relationships.

- We want to learn the weights on our catalog much faster.