How to Serve Models

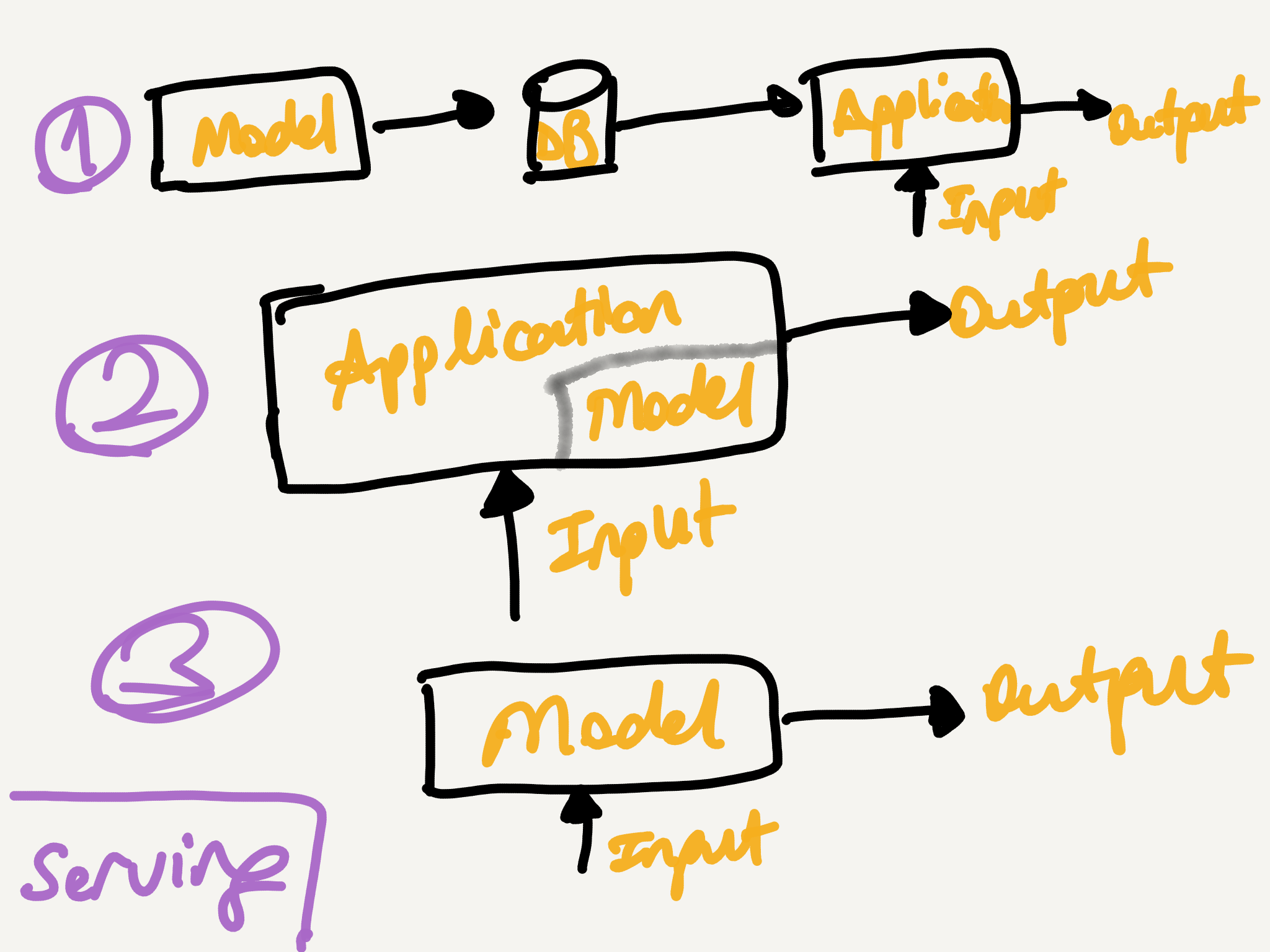

There are many ways to serve machine learning (ML) models. In this post, I want to go over the three architectures/patterns I have observed most often over the years, and then outline their advantages and disadvantages in a more objective manner. If you are looking for the rant, scroll down to the bottom of the post.

The three patterns are:

- Materialize/compute predictions offline and serve them through a database

- Use the model within the main application, so that model serving/deployment is done as part of the main application deployment

- Use the model separately in a microservice architecture where you send input and get output

Of these three approaches, the third is the most architecturally flexible and the most modern in terms of microservice architecture, but it is not a silver bullet: it assumes the infrastructure is ready and able to support that flexibility. While I believe it is the best architecture to deploy, I also need to acknowledge that the other methods have their own advantages over it.

Patterns 1, 2 and 3 can also be considered an evolution of the architecture. The first is the fastest way to get something out of the door if the input domain is bounded and you want to serve the first model right off the bat, to prove the customer impact and business value of the model before investing a lot in operations and “making it right”. This way you evolve your architecture incrementally while delivering incremental value. If the input domain is not bounded, the second approach might be a good compromise between the options. From a maintainability and scalability perspective, however, the third provides the most benefits compared to the other two, both in separating the concerns of training and model serving and in being easy to scale up/down with traffic. Without further ado, let’s look at some of the advantages and disadvantages of each architecture.

1. Materialize/Compute Predictions

This is one of the earliest forms of prediction serving architecture. A data scientist outputs a SQL table, that table is ingested into production, and the application serves it from a database. Simple enough. Even though this architecture does not serve the model directly, it has a number of advantages.

Advantages:

- It does not require any special infrastructure. A database that is well suited for production use cases can be enough.

- It does not require any infrastructure to deploy/serve the model.

- A cron-like job can populate/update the database, and the database then serves the predictions automatically.

- It can serve predictions fast, as the predictions are pre-computed and only need a database lookup.

- For mission-critical applications, this low latency might be very important, and in some cases it might make this the preferred mechanism.

- Even though the model that generates the predictions might be slow, or certain preprocessing steps might take a long time, this is hidden from the serving portion of the application.

- Rolling out and rolling back models becomes straightforward.

- Whether the engineer prefers to use separate tables or separate records keyed by some identifier, it is easy to roll out and roll back models through table name changes or record metadata changes.

- It decouples the main application from the predictions serving logic.

- The main application/API does not need to know how the predictions are computed/materialized.

- Generating predictions can be a completely independent process from reading/serving predictions online.

- This can be an advantage if the technology stacks are different.
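The advantages above can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes SQLite as the database, and the table names (`predictions`, `active_model`), the version labels and the helper functions are all hypothetical. The offline job writes one set of rows per model version, and rollout or rollback is a single metadata update.

```python
import sqlite3


def create_schema(conn):
    # One row per (model version, input); the primary key makes lookups cheap.
    conn.execute(
        "CREATE TABLE predictions ("
        "model_version TEXT, entity_id TEXT, score REAL, "
        "PRIMARY KEY (model_version, entity_id))"
    )
    # Single-row table that records which model version is live.
    conn.execute(
        "CREATE TABLE active_model ("
        "id INTEGER PRIMARY KEY CHECK (id = 1), model_version TEXT)"
    )


def load_batch(conn, model_version, scores):
    """Offline step (for example run from cron): store one model's predictions."""
    conn.executemany(
        "INSERT OR REPLACE INTO predictions VALUES (?, ?, ?)",
        [(model_version, entity_id, score) for entity_id, score in scores.items()],
    )
    conn.commit()


def activate(conn, model_version):
    """Rollout and rollback are the same operation: repoint the live version."""
    conn.execute("INSERT OR REPLACE INTO active_model VALUES (1, ?)", (model_version,))
    conn.commit()


def serve(conn, entity_id):
    """Online step: a plain lookup. Returns None for inputs never precomputed."""
    row = conn.execute(
        "SELECT p.score FROM predictions p "
        "JOIN active_model a ON p.model_version = a.model_version "
        "WHERE p.entity_id = ?",
        (entity_id,),
    ).fetchone()
    return None if row is None else row[0]


conn = sqlite3.connect(":memory:")
create_schema(conn)
load_batch(conn, "v1", {"user-1": 0.2, "user-2": 0.9})
load_batch(conn, "v2", {"user-1": 0.4, "user-2": 0.7})

activate(conn, "v2")              # roll out the new model
score_v2 = serve(conn, "user-1")
activate(conn, "v1")              # roll back without touching the application
score_v1 = serve(conn, "user-1")
unseen = serve(conn, "user-3")    # never precomputed, so there is nothing to serve
```

Note that `serve` never touches the model itself, which is exactly the decoupling described above. The `None` result for an unseen input also previews the main limitation of this pattern.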

Disadvantages:

- It requires all of the inputs ahead of time.

- For certain domains like search, this may not be feasible or realistic, as some of the queries being entered may not have been seen before.

- For features/datasets that are highly dynamic and have a large number of combinations, it will require a large amount of storage space.

- Metadata and parameters need to be part of the tables or records inserted into the database.

- For any type of A/B testing or online comparison, the table or its records need to communicate the model specifications to the application, as the database itself becomes the abstraction layer for the model.

- It is hard to introduce new variables, as storage and computation requirements grow exponentially with the number of variables.

- For domains like personalized search, the number_of_queries * total_number_of_users rows that are required get multiplied by the number of variations of each additional feature (like brand affinity).

- This is not easy to scale, as each feature requires multiple additional tables with the same structure as the original one.


- It cannot leverage online features and online context to influence predictions.

- Since every prediction is precomputed, it cannot utilize online context and other features that might be useful at prediction serving time.

- It is hard to update and maintain predictions across different models.

- A model update needs to either update all of the records/tables in place or create new records/tables.
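To make the storage argument for personalized search concrete, here is a small back-of-the-envelope calculation. All the numbers (query count, user count, number of brand affinity buckets) and the function name are made up for illustration; only the multiplication itself comes from the reasoning above.

```python
def materialized_rows(num_queries, num_users, variations_per_feature=()):
    """Rows needed to precompute every (query, user, feature variation) combination."""
    rows = num_queries * num_users
    for variations in variations_per_feature:
        rows *= variations  # each new feature multiplies the table size
    return rows


# Hypothetical scale: 10,000 distinct queries and 1,000,000 users.
base_rows = materialized_rows(10_000, 1_000_000)

# Adding one feature with 20 variations (say, 20 brand affinity buckets).
with_brand_rows = materialized_rows(10_000, 1_000_000, [20])

# Adding a second feature with 5 variations on top of that.
with_two_features_rows = materialized_rows(10_000, 1_000_000, [20, 5])

growth_factor = with_two_features_rows // base_rows
```

Under these assumed numbers, two modest features already make the table a hundred times larger than the base case, and every model update has to regenerate all of it.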

2. Embedded Model

In this architecture, the model is embedded into the main application. It is a compromise between 1 and 3: predictions do not have to be stored, and the application can still serve them in real time. However, it tightly couples the serving layer with the main application, which increases the overhead of maintaining and operating both the model and the application itself. The upside of this coupling is that there is no network call between the application and the model.

Advantages:

- There is no network call for model predictions

- The model is typically loaded into memory within the application, so a prediction is a function call.

- This improves serving latency.

- Uses the same technology stack as the model

- The same engineers who work on the main application can easily make changes through the same stack.

- If the machine learning library or framework is written in the same language as the application, the integration between model and application is easy and works out of the box.

- No separate infrastructure is needed specifically for the model

- The model can use the same application infrastructure and the same release and deployment process

- This reduces the operational overhead for the model, even though it adds incremental overhead to the application.
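As a rough sketch of what "a function call" means here, the example below deserializes a toy model once at application startup and calls it in-process from a request handler. The `EmbeddedModel` class, the JSON parameter format and `handle_request` are hypothetical stand-ins; a real application would load whatever format its ML library produces.

```python
import json
import math


class EmbeddedModel:
    """Toy logistic-regression model that lives in the application's memory."""

    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    @classmethod
    def from_json(cls, payload):
        # Deserialization happens inside the application process.
        params = json.loads(payload)
        return cls(params["weights"], params["bias"])

    def predict(self, features):
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))


# Loaded once at startup; it ships and deploys together with the application.
MODEL = EmbeddedModel.from_json('{"weights": [0.5, -0.25], "bias": 0.0}')


def handle_request(user_features):
    """Application request handler: the prediction is a plain in-process call."""
    score = MODEL.predict(user_features)
    return {"score": score, "recommended": score >= 0.5}
```

There is no serialization or network hop between `handle_request` and `MODEL.predict`, which is where the latency benefit comes from. The flip side is that changing the model parameters means redeploying the application.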

Disadvantages:

- It is not scalable

- If your main application needs to use more than one model, all of these models need to be part of the main application, which creates a lot of maintenance overhead for the software engineers who build and maintain it.

- Adding more than a certain number of models may require a lot of memory and might put additional constraints on the hardware on which the application can be deployed.

- Has to use the same technology stack as the model

- The model needs to be at least deserialized by the application to be loaded into memory. This might create additional overhead in the deserialization as well as in the model representation in that particular language stack. An example would be TensorFlow/PyTorch being used in a JVM stack. Both of these libraries expose a JNI interface (TF JNI and PyTorch JNI), but there is a certain additional overhead in passing the model output back and forth to the JVM layer.

- Some language stacks that are very commonly used in software development, such as GoLang and Rust, may not have much support from ML libraries and the ML community in general. This may require in-house development for deserialization, which might be error prone.

- Couples model deployment with the development of the main application

- This creates maintenance overhead and technical debt over time

- If there is a problem in model deployment and serving, it might impact the main application’s SLA. This creates reliability issues for the main application as a whole

- Model deployment and release depend on the main application deployment, and the main application deployment also depends on the model deployment

- Changes to either the model or the application need to accommodate the other. This prevents easy rollback of a new model, as it requires rolling back the whole application with it.

3. Microservice Model Serving

In this architecture, the model is served as a separate microservice, independent from the main application. While this architecture is the most flexible in terms of model deployment, it has both advantages and disadvantages.
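A minimal sketch of the "send input and get output" contract, using only the Python standard library. The endpoint paths (`/v1/predict`, `/v2/predict`), the JSON payload shape and the two toy "models" are assumptions made for illustration; a real deployment would more likely sit behind a proper web framework or an off-the-shelf model server.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical models, one per versioned endpoint.
MODELS = {
    "/v1/predict": lambda features: sum(features),
    "/v2/predict": lambda features: sum(features) / len(features),
}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        model = MODELS.get(self.path)
        if model is None:
            self.send_error(404, "unknown model endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        features = json.loads(self.rfile.read(length))["features"]
        body = json.dumps({"prediction": model(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_server():
    """Model service: runs on its own port, independent of the main application."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PredictHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def predict(base_url, version, features):
    """Main application side: the model is just an HTTP call away."""
    request = urllib.request.Request(
        f"{base_url}/{version}/predict",
        data=json.dumps({"features": features}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # An empty ProxyHandler bypasses any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())["prediction"]


if __name__ == "__main__":
    server = start_server()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    print(predict(base_url, "v1", [1, 2, 3]))
    print(predict(base_url, "v2", [1, 2, 3]))
    server.shutdown()
    server.server_close()
```

The main application only knows a URL and a payload format, so the service behind it can be rewritten, rescaled or redeployed without the caller changing.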

Advantages:

- Model deployment and release are very flexible

- An ML engineer can use different strategies to deploy these models.

- Canary deployments as well as gradual, multi-phase deployments are possible and easy.

- Online monitoring and validation can happen per model in the rollout process.

- Generates and serves predictions in real time and online.

- It can leverage online features and context to influence predictions.

- It can easily incorporate new features at serving time.

- It can generate predictions on data that has not been seen before. Depending on the model specifications, it can also handle out-of-vocabulary (OOV) situations that rules in an offline computation cannot handle.

- Since serving can be done outside of the main application, this gives a lot of flexibility on the serving layer.

- Serving layer can be built and maintained completely separately from the main application.

- Serving layer can be deployed independently from the main application.

- Serving layer can change and be updated independently from the main application.

- Serving layer can utilize a completely different technology stack from the main application. This is especially important if your main stack uses a language that is not commonly adopted in the machine learning community, like GoLang or Rust.

- Decouples main application and model serving layer

- It separates application concerns from model serving concerns, which results in very low technical debt within the application

- Scalable

- Model serving layer can be scaled out independently from the main application

- Since models are served in separate microservices, adding more models means adding new containers/services that can be scaled separately.

- Depending on how the model prediction output is used, calls to the serving layers can be parallelized at prediction time.
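One way the canary and gradual rollout mentioned above could look in code is deterministic, hash-based traffic splitting. This is a sketch under assumptions: the version labels, the `request_id` key and the 100-bucket scheme are illustrative choices, and in practice this logic often lives in a load balancer or service mesh instead of application code.

```python
import hashlib


def canary_bucket(request_id, buckets=100):
    """Map a request (or user) id to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_version(request_id, canary_percent, stable="v1", canary="v2"):
    """Send roughly canary_percent of traffic to the canary model version."""
    return canary if canary_bucket(request_id) < canary_percent else stable


# Gradual rollout: the same ids stay on the canary as the percentage grows.
phases = [1, 10, 50, 100]
request_ids = [f"request-{i}" for i in range(10_000)]
canary_share_by_phase = {
    percent: sum(choose_version(rid, percent) == "v2" for rid in request_ids)
    / len(request_ids)
    for percent in phases
}
```

Because the bucket depends only on the id, a request that hits the canary at 10% also hits it at 50%, which keeps per-model online monitoring comparable across rollout phases. Rolling back is just setting `canary_percent` to 0.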

Disadvantages:

- In order to fully utilize the flexibility of the approach, it requires separate infrastructure.

- Monitoring as well as online validation and evaluation metrics need to be part of the serving layer

- The model as well as the API needs to be a high-performance, low-latency prediction system.

- This requires engineers to optimize the prediction layer or use an off-the-shelf solution like TensorFlow Serving

- It requires separate service deployment and release

- This will create additional overhead in terms of operations.

- This needs monitoring, alerting and production readiness of the models and services separately.

Conclusion

Whenever I see the pre-computed, materialized model serving pattern, I cringe. Even in the best-case scenario where you completely separate the tables of different models, you still need to make sure that every single model iteration outputs a full-fledged table for all the inputs that you know of. This is on top of the obvious limitation that you cannot do anything in a very dynamic manner within the application. Unless the organization opted into this pattern for a very specific reason, it also shows that the engineering organization is not mature enough to serve a model in a production capacity, for one reason or another.

The microservice model is the one that everyone should aspire to. Even if you do not get it right on the first try, it will be worth it. It not only separates the concerns of ML from the main application, but also empowers ML engineers to build the system end to end and make the necessary adjustments in an isolated manner. If you want to use a different library or framework, go ahead; as long as you comply with the SLA, you should be able to do anything you want. If you want to deploy a new model but do not have support for model versioning, use API versioning to distinguish between models. If you want to do canary deployments because you do not know how the model performs in an online setting, you can use the API canary deployment that your DevOps team built, with no problem. If you cannot do the microservice model, the embedded model would be a good compromise.