How to Monitor Models

After we serve machine learning models, we also need to monitor them. In this post, I will go into the details of monitoring machine learning models.

Needless to say, monitoring is a very important part of machine learning based applications and services. On top of the usual concerns for any software system, ML brings unique monitoring challenges to the table. Two of them are:

- It is hard to find out whether a model is deteriorating in production

- It is hard to control and create a stable environment where you can make assumptions about the inputs to the system

If you compare this with non-ML applications, the differences are the data, the model characteristics, and how the model behaves. This is only exacerbated over time: from the moment a model starts serving real customer traffic in production, it deteriorates gradually, because the training/validation set used to tune the model's parameters diverges from the production data.

Because of all this, one needs to put a lot more thought into building ML based systems, and that requires even more work on the monitoring side of the applications/services.

The two questions that outline the monitoring concerns are the following:

How do I know whether my models perform better or worse?

- Is there a business metric you can track that, everything else being equal (ceteris paribus), signifies that the machine learning model is performing better?

If there is one, deterioration of the model can be measured against a business/customer-impacting metric. This is also a good metric to measure across the site to understand the downstream effects of the model.

How do I know if my model is down?

This is a better-studied question and it is relatively easy to answer. We define an SLA and certain operational metrics around the service/API that serves the model/predictions, and measure against the SLA.

I will answer both of these questions in much more detail in this post, but let’s first look at what we need to do to accommodate change, which is a crucial part of ML based systems.

Change

Unlike software systems, where change is more often than not the root cause of disturbance in the system, ML systems behave quite differently. The inputs to the system, which define how your machine learning based application works, change over time. It is in your best interest to keep adjusting the model to the changing world.

In non-ML software systems, you can use property-based testing and other advanced testing methods to test your software against possible inputs. Change still carries risk, so a code freeze aims to reduce change in order to increase reliability. Especially if you have mission-critical windows, like the holiday season in the ecommerce space, this increases the stability of the system and it is considered a best practice for software systems.

In ML based systems, this is simply not possible, and it is not the best strategy for adapting to ever-changing data and consumer needs. As the input data and the behavior you observe change, you need to change the model, the training data, and the other components that respond to those changes.

If you could capture all of the possible input and output relations deterministically in tests, you would not need an ML model in the first place.

The reason you build machine learning based applications is to generalize to inputs that have not been seen before. This is why ML exists, but it is also what makes it hard to test.

Changing Anything Changes Everything

Google’s seminal paper introduces the concept of CACE (Changing Anything Changes Everything). If you are adding a new field to a form where you collect data, be mindful of this principle. If you are using failed requests to a system as negative samples, you may have a hard time when a lot of bad actors try to log in to the system. If you are removing one analytics beacon data from existing data pipeline,

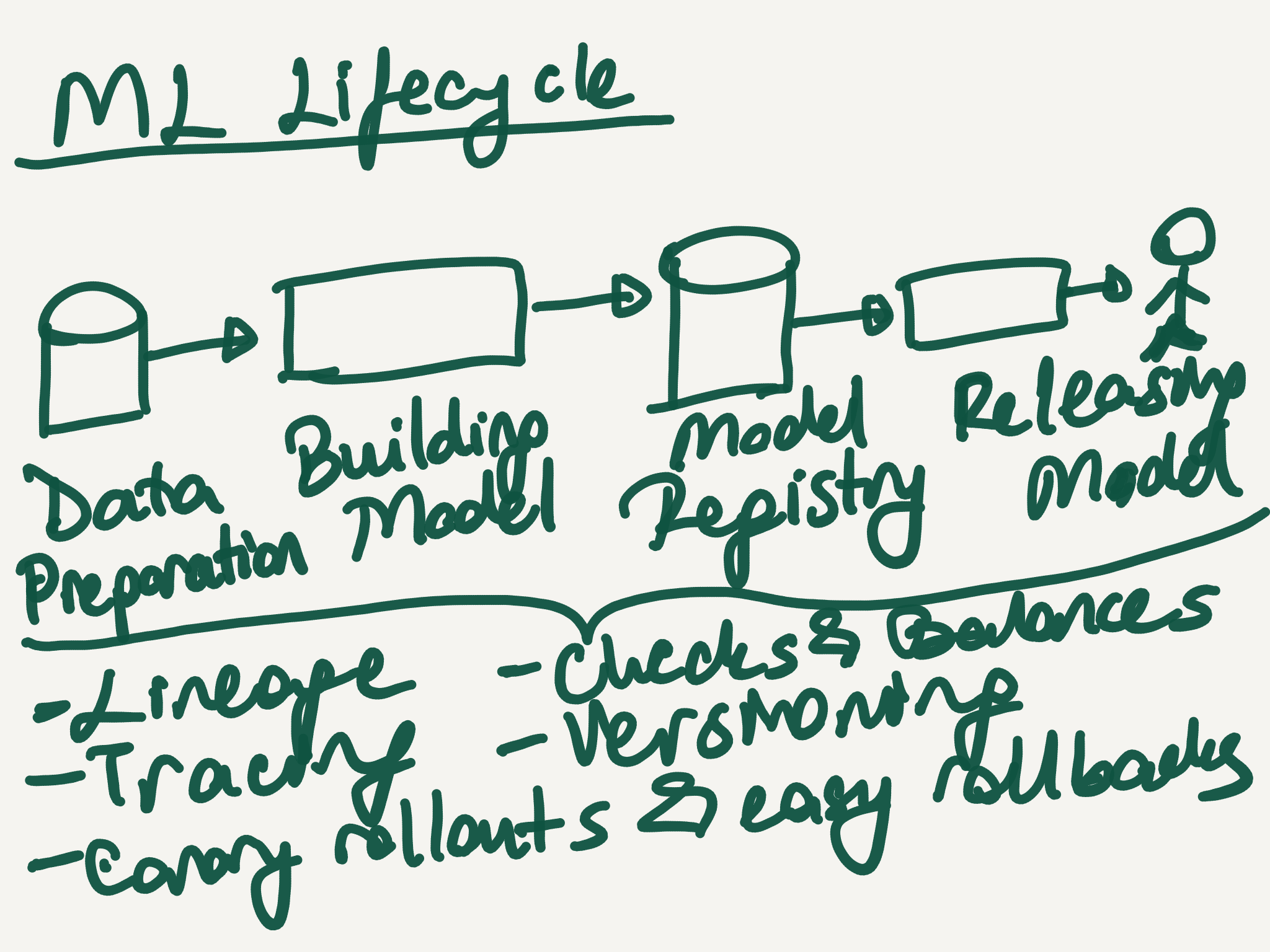

Data Pipeline/Preparation

Monitoring of the models should start at the data level, as this part has a significant impact on model performance. This might also be where you get the best return on investment, since in this layer ETL (extract-transform-load) jobs can be monitored and alerted on with basic checks. Since features and model training depend heavily on basic data preparation, you need to make sure that all issues/errors can be monitored properly and alerted on.

Some common things to monitor:

- Monitor the data input distribution

- Monitor basic data size per minute, hour, and day

- Monitor the data schema

    - Are there any new fields added?

    - Are there any fields being removed?

- Monitor anomalies at the field level or at the record level

- Monitor novel fields

    - Is there anything you observe now that you did not observe before?

- Monitor fluctuations in the data fields

    - Computing a basic mean and median on numerical fields and monitoring them over time is simple and ensures that you do not miss these changes

    - For categorical variables, a histogram might be useful to understand whether there is a large change

- Monitor the lineage of the data

    - Where does the data come from? When did it originate?

    - Which service and application produced this data?

    - Which services and applications processed this data?
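A couple of the checks above can be sketched in a few lines of Python. This is only a minimal illustration using the standard library: the field names, the sample values, and the 20% alert threshold are all made up for the example and would need to come from your own pipeline.

```python
from statistics import mean, median


def schema_diff(expected_fields, record):
    """Return fields that appeared or disappeared relative to the expected schema."""
    seen = set(record)
    expected = set(expected_fields)
    return {"added": sorted(seen - expected), "removed": sorted(expected - seen)}


def summarize(values):
    """Basic statistics to track over time for one numeric field."""
    return {"count": len(values), "mean": mean(values), "median": median(values)}


def relative_shift(baseline, current):
    """Relative change of the current mean against the baseline mean."""
    base = mean(baseline)
    if base == 0:
        return float("inf") if mean(current) != 0 else 0.0
    return abs(mean(current) - base) / abs(base)


# Hypothetical record: 'coupon' is a new field and 'price' went missing.
expected = ["user_id", "price", "category"]
record = {"user_id": 1, "category": "produce", "coupon": "X1"}
diff = schema_diff(expected, record)

# Hypothetical numeric field observed in a baseline window and a current window.
baseline_prices = [10.0, 12.0, 11.0]
current_prices = [15.0, 16.0, 17.0]
stats = summarize(current_prices)
shift = relative_shift(baseline_prices, current_prices)

# Assumed alert rule: any schema change, or a mean shift above 20%.
should_alert = bool(diff["added"] or diff["removed"]) or shift > 0.2
```

In practice the summaries would be emitted as time series to whatever monitoring system you already use, so that the alert rule lives there rather than in the job itself.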

If you are using a streaming based solution to ingest data, you might have better luck with data lineage, as long as you establish a mechanism to ensure that metadata is preserved and communicated downstream.
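One possible way to preserve that metadata is to wrap each event in an envelope that every stage copies forward. The sketch below is an assumption about how this could look, not a description of any particular streaming framework; the `Envelope` class and the service names are hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Envelope:
    """An event payload plus the lineage metadata that travels with it."""
    payload: dict
    produced_by: str
    produced_at: float
    processed_by: list = field(default_factory=list)


def produce(payload, service):
    """Create an envelope at the point where the data originates."""
    return Envelope(payload=payload, produced_by=service, produced_at=time.time())


def process(envelope, service, transform):
    """Apply a transform and append this service to the lineage trail."""
    return Envelope(
        payload=transform(envelope.payload),
        produced_by=envelope.produced_by,
        produced_at=envelope.produced_at,
        processed_by=envelope.processed_by + [service],
    )


# Hypothetical three-stage flow: producer, then two processors.
event = produce({"clicks": 3}, service="web-beacon")
event = process(event, "sessionizer", lambda p: {**p, "session": "s1"})
event = process(event, "feature-builder", lambda p: {**p, "clicks_x2": p["clicks"] * 2})
```

With this shape, the questions "where does the data come from", "when did it originate", and "which services processed it" can be answered from the event itself.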

Features

If you explicitly use features in the data pipeline/offline model training as well as in the serving layer, this is another area that needs monitoring. If your serving layer and data processing/pipeline layer do not share a similar technology stack, that is another reason to monitor this area closely, possibly with some alerts.

Some more areas that need alerts:

- Data pipeline features and serving features should match each other for a given input.

- If the serving layer and the data pipeline/preparation layer use different data sources to derive features, this is crucial. If they use the same data sources, it is still good to ensure that the libraries/frameworks that derive features match between the serving and data pipeline layers.

- Feature distributions need to be monitored and alerted on. In particular, if there are feature issues on the model building and/or serving side, one side may not be able to use the features, and if the model handles null or unavailable values gracefully, this will be a silent error.
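A parity check between the two layers could look roughly like the following. It assumes both layers can emit their features as plain dictionaries for the same input record; the feature names and tolerance are hypothetical.

```python
import math


def feature_mismatches(offline, online, tol=1e-6):
    """Compare two feature dicts computed for the same input record."""
    problems = {}
    for name in sorted(set(offline) | set(online)):
        a, b = offline.get(name), online.get(name)
        if a is None or b is None:
            if a is not b:
                problems[name] = (a, b)  # missing or null on exactly one side
        elif isinstance(a, (int, float)) and isinstance(b, (int, float)):
            if not math.isclose(a, b, rel_tol=tol, abs_tol=tol):
                problems[name] = (a, b)
        elif a != b:
            problems[name] = (a, b)
    return problems


def null_rate(rows, name):
    """Share of rows where a feature is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(name) is None) / len(rows)


# Hypothetical features for one input, from the pipeline and from serving.
offline = {"age_bucket": 3, "avg_spend": 41.5, "country": "US"}
online = {"age_bucket": 3, "avg_spend": 40.0, "country": None}
mismatches = feature_mismatches(offline, online)

# Null rate over a window of served feature rows; a rising value is the
# kind of silent error that a gracefully degrading model would hide.
served = [{"country": "US"}, {"country": None}, {}, {"country": "DE"}]
country_null_rate = null_rate(served, "country")
```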

Training

For model training, we not only need to make sure that upstream data and features are guarded, but the model training itself also needs to be monitored. Here, very basic things like the mean, standard deviation, and other statistical properties of predictions on the evaluation and test sets are useful.

Some common things to monitor here:

- Confusion matrix if the model is a classification model

- RMSE if the model is a regression model

- Loss change over epochs
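These three metrics are simple enough to compute without any framework. The sketch below uses only the standard library; the `loss_stalled` rule with its `patience` and `min_delta` parameters is an assumed heuristic for flagging a training run whose loss stopped improving, not a standard definition.

```python
import math
from collections import Counter


def confusion_matrix(y_true, y_pred):
    """Counts keyed by (actual label, predicted label)."""
    return Counter(zip(y_true, y_pred))


def rmse(y_true, y_pred):
    """Root mean squared error for a regression model."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def loss_stalled(losses, patience=3, min_delta=1e-3):
    """True if the last `patience` epochs did not beat the earlier best by min_delta."""
    if len(losses) <= patience:
        return False
    best_before = min(losses[:-patience])
    return min(losses[-patience:]) > best_before - min_delta


# Toy evaluation data for illustration only.
cm = confusion_matrix([1, 0, 1, 1], [1, 0, 0, 1])
error = rmse([3.0, 5.0], [2.0, 7.0])
stalled = loss_stalled([1.0, 0.8, 0.7, 0.7, 0.7, 0.7])
```

Logging these per training run makes it possible to compare a new run against previous ones before the model ever reaches serving.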

Serving

Machine learning systems need more monitoring than software systems. Software systems need to answer questions around the SLA, which can be measured through uptime and SLA violations. ML systems, however, also need to answer questions about the model itself on the serving layer.

Important Operational Metrics

On the serving layer, one can look at the usual suspects:

- Resources used (CPU, Memory, IOPS, GPU)

- Latency

- Errors and error rates

- System Capacity

- Authentication

- API metrics
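As an illustration of measuring latency and error rate against an SLA, here is a small sketch over one window of requests. The thresholds (a 200 ms p99 limit and a 1% error budget) are made-up numbers, and treating HTTP status codes of 500 and above as errors is an assumption about the serving API.

```python
def percentile(values, q):
    """Nearest-rank percentile; q is in (0, 100]."""
    ordered = sorted(values)
    # Ceiling division done with floats; the rank is at least 1.
    rank = max(1, -int(-(q * len(ordered)) // 100))
    return ordered[rank - 1]


def sla_report(latencies_ms, status_codes, p99_limit_ms=200.0, max_error_rate=0.01):
    """Compare one window of requests against hypothetical SLA thresholds."""
    p99 = percentile(latencies_ms, 99)
    error_rate = sum(1 for s in status_codes if s >= 500) / len(status_codes)
    return {
        "p99_ms": p99,
        "error_rate": error_rate,
        "violated": p99 > p99_limit_ms or error_rate > max_error_rate,
    }


# Toy window: latencies of 1..100 ms and two server errors out of 100 requests.
report = sla_report(list(range(1, 101)), [200] * 98 + [500, 503])
```

Here the latency is within the limit but the error rate is not, so the window counts as an SLA violation.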

Model Quality Metrics

If we cannot measure model quality through a well-defined business/customer metric, we might come up with a different metric that shows how good the model is in production.

There are many ways to look at model quality metrics.

Note that with these metrics, we are not only trying to measure the accuracy of the model, but also the distribution of the input data and the distribution of the model's output. For example, a change in the input distribution causes the well-known issue of drift (usually called data drift when the inputs shift, and concept drift when the relationship between the inputs and the target shifts). Ideally, we should build guards against these types of problems beforehand. Every model suffers from drift once it has been put into production, because the data used to train the model differs from the online data. This is bound to happen: from the moment the model is put into production, it is expected to degrade.
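One common way to put a number on a distribution change is the Population Stability Index (PSI), which compares binned shares of a baseline sample and a current sample. The sketch below is one possible implementation with fixed bin edges; the sample values are invented, and the frequently quoted rule of thumb that a PSI above roughly 0.2 signals a meaningful shift should be treated as a starting point rather than a hard rule.

```python
import math


def histogram(values, edges):
    """Share of values per bin; values outside the edges fall into the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    total = len(values)
    return [c / total for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline and a current sample."""
    e = histogram(expected, edges)
    a = histogram(actual, edges)
    # eps avoids log(0) and division by zero for empty bins.
    return sum(
        (max(ai, eps) - max(ei, eps)) * math.log(max(ai, eps) / max(ei, eps))
        for ei, ai in zip(e, a)
    )


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
# Hypothetical model scores at training time and in production.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
live_scores = [0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95, 0.99]
drift = psi(training_scores, live_scores, edges)
```

The same function can be applied to an input feature or to the model's output scores, which covers both distributions mentioned above.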

Data Growth Strategy

As you build different features, your customer base will grow, and data ingestion and processing will increase as well. Monitor this growth from an infrastructure perspective. Ask the following questions:

- How many more nodes do you need with increasing traffic?

- How much can you parallelize this workload?

- With this growth, do you need many more machines or do you need to rethink the overall data infrastructure in 2-3 years?

- Can you ingest the higher amount of data and train models without any issues?

- In 6 months/1 year/3 years, is memory going to be an issue or are you able to meet the SLAs for training freshness with the data growth rate?

- Do you have a plan to accommodate the data growth in the ML training pipeline?
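A rough way to start answering these questions is to project the current growth rate forward. The sketch below assumes compound monthly growth, which is a simplification, and all of the numbers are invented for the example.

```python
import math


def months_until_limit(current_gb, monthly_growth_rate, capacity_gb):
    """Months of compound growth before data volume reaches or exceeds capacity."""
    if current_gb >= capacity_gb:
        return 0
    if monthly_growth_rate <= 0:
        return None  # never reaches the limit at this rate
    return math.ceil(
        math.log(capacity_gb / current_gb) / math.log(1 + monthly_growth_rate)
    )


def projected_volume(current_gb, monthly_growth_rate, months):
    """Data volume after a number of months of compound growth."""
    return current_gb * (1 + monthly_growth_rate) ** months


# Hypothetical: 100 GB today, growing 10% per month, with 200 GB of headroom.
runway = months_until_limit(100, 0.10, 200)
volume_in_3_years = projected_volume(100, 0.10, 36)
```

Comparing the runway against how long an infrastructure change takes to plan and ship tells you whether adding machines is enough or whether the data infrastructure needs rethinking.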

User Behavior Change Strategy

In case there is a drastic change in user behavior due to exogenous factors, we should have a plan to monitor such events and react to them. Some useful questions are:

- What happens when a pandemic happens and suddenly all of the products that you sell become out of stock?

- What happens when users start buying certain categories more and more (like everyday living, produce, and frozen foods) rather than fashion/home categories?
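A shift in the category mix like the one described can be tracked with a single number. The sketch below uses total variation distance between the purchase shares of two windows; the category names and counts are made up, and the choice of distance is an assumption, since other measures would work as well.

```python
from collections import Counter


def category_shares(purchases):
    """Share of purchases per category in one time window."""
    counts = Counter(purchases)
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.items()}


def total_variation(baseline, current):
    """Half the L1 distance between two share dicts (0 = same mix, 1 = disjoint)."""
    cats = set(baseline) | set(current)
    return 0.5 * sum(abs(baseline.get(c, 0.0) - current.get(c, 0.0)) for c in cats)


# Hypothetical purchase logs for a normal week and a week during a disruption.
baseline_mix = category_shares(["fashion"] * 5 + ["home"] * 3 + ["produce"] * 2)
current_mix = category_shares(
    ["fashion"] * 1 + ["home"] * 1 + ["produce"] * 5 + ["frozen"] * 3
)
mix_shift = total_variation(baseline_mix, current_mix)
```

A value that stays high for several windows in a row suggests a behavior change rather than noise, and is a reasonable trigger for reviewing or retraining the model.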

Best Practices

- Monitoring output predictions and their distribution is generally a good practice, and it can point out problems very early on.

- Monitor the lowest and highest probabilities of predictions

- Compare the predictions of the current model with those of an older model.

- Use A/B testing to compare between different models based on some customer/business metric.

- Replay older production data to compare predictions.

    - This shows some other exogenous factors.

    - COVID behavior changes can be observed through this mechanism.

- Monitoring infrastructure should be flexible, and engineers should be able to create any type of alert on top of it.

- Monitoring exists so that you can react to what it shows, and that is what alerts are for. There is nothing sadder than a monitoring infrastructure where engineers cannot create alerts on top of it.
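Several of these practices (replaying older data, comparing the current model with an older one, and watching the lowest and highest predictions) can be combined in one small routine. The sketch below treats a model as any callable that returns a score; the two toy models, the records, and the 0.5 decision threshold are hypothetical.

```python
from statistics import mean


def replay_compare(old_model, new_model, records, threshold=0.5):
    """Score the same replayed records with both models and summarize the differences."""
    old_scores = [old_model(r) for r in records]
    new_scores = [new_model(r) for r in records]
    flips = sum(
        (o >= threshold) != (n >= threshold) for o, n in zip(old_scores, new_scores)
    )
    return {
        "old_min": min(old_scores),
        "old_max": max(old_scores),
        "new_min": min(new_scores),
        "new_max": max(new_scores),
        "mean_abs_diff": mean(abs(o - n) for o, n in zip(old_scores, new_scores)),
        "decision_flip_rate": flips / len(records),
    }


def old_model(record):
    """Stand-in for the older production model."""
    return min(1.0, record["visits"] / 10)


def new_model(record):
    """Stand-in for the current model."""
    return min(1.0, record["visits"] / 8)


# Stand-in for a sample of replayed production records.
replayed = [{"visits": 2}, {"visits": 4}, {"visits": 8}]
report = replay_compare(old_model, new_model, replayed)
```

Each value in the report can be emitted as a metric, so that engineers can put alerts on whichever one matters for their use case.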